How well do we really understand the temporal I/O behavior of HPC applications, and why does it matter?

Read the extended version of this paper “A Deep Look Into the Temporal I/O Behavior of HPC Applications” here

Exa-DoST is proud to share its new publication at IPDPS 2025: A Deep Look Into the Temporal I/O Behavior of HPC Applications.

In a collaboration between Inria (France), TU Darmstadt (Germany), and LNCC (Brazil), Francieli Boito, Luan Teylo, Mihail Popov, Theo Jolivel, François Tessier, Jakob Luettgau, Julien Monniot, Ahmad Tarraf, André Carneiro, and Carla Osthoff present a large-scale study of temporal I/O behavior in high-performance computing (HPC), based on more than 440,000 traces collected over 11 years from four large HPC systems.

Understanding temporal I/O behavior is critical to improving the performance of HPC applications, particularly as the gap between compute and I/O speeds continues to widen. Many existing techniques, such as burst buffer allocation, I/O scheduling, and batch job coordination, depend on assumptions about this behavior. This work examines fundamental questions about the temporality, periodicity, and concurrency of I/O behavior in real-world workloads. By analyzing traces from both the system and the application perspectives, we provide a detailed characterization of how HPC applications interact with the I/O subsystem over time.

Key contributions include:

- A classification of recurring temporal I/O patterns across diverse workloads.

- Insights into I/O concurrency and shared resource usage.

- Public release of the large datasets used in the study, to support further research.

Our findings offer a solid empirical foundation for future developments in behavior-aware monitoring, I/O scheduling, and performance modeling.

Read the full version on HAL.

Photo credit: Francieli Boito

2025 InPEx workshop

Find all the presentations on the InPEx website here

From April 14th to 17th, 2025, the InPEx global network of experts (Europe, Japan and the USA) gathered in Kanagawa, Japan. Hosted by RIKEN-CSS and Japanese universities with the support of NumPEx, the InPEx 2025 workshop was dedicated to the challenges of the post-Exascale era.

Find all NumPEx contributions below:

- Introduction, with Jean-Yves Berthou, Inria director of NumPEx and representative for Europe

- AI and HPC: Sharing AI-centric benchmarks of hybrid workflows, co-chaired by Jean-Pierre Vilotte (CNRS)

- Software Production and Management, co-chaired by Julien Bigot (CEA)

- AI and HPC: Generative AI for Science, co-chaired by Alfredo Buttari (IRIT) and Thomas Moreau (Inria)

- Digital Continuum and Data Management, co-chaired by Gabriel Antoniu (Inria)

If you want to know more, all presentations are available on the InPEx website.

Photo credit: Corentin Lefevre/Neovia Innovation/Inria

NumPEx holds its first General Assembly

Bringing together 130 researchers, engineers, and partners at Inria Saclay, the 2025 NumPEx General Assembly was a key step for the future of NumPEx.

Over two days, participants engaged in discussions, workshops, and guest talks to explore the challenges of integrating Exascale computing into a broader digital continuum. The first day was marked by the live announcement that France had been selected to host one of the European AI Factories.

This General Assembly was also the perfect occasion to introduce YoungPEx to the entire PEPR community through a presentation and one of its first workshops. YoungPEx is a new initiative aimed at fostering collaboration among young researchers, including PhD students, post-docs, engineers, and volunteer permanent researchers. It will serve as a dynamic platform for networking, knowledge exchange, and interdisciplinary collaboration across the HPC and AI communities.

We were also pleased to welcome the TRACCS and Cloud research programs, which presented both ongoing and potential collaborations with NumPEx.

With this first General Assembly, NumPEx strengthens its community and continues on its path to Exascale and beyond.

© PEPR NumPEx

The 2025 annual meeting of Exa-MA

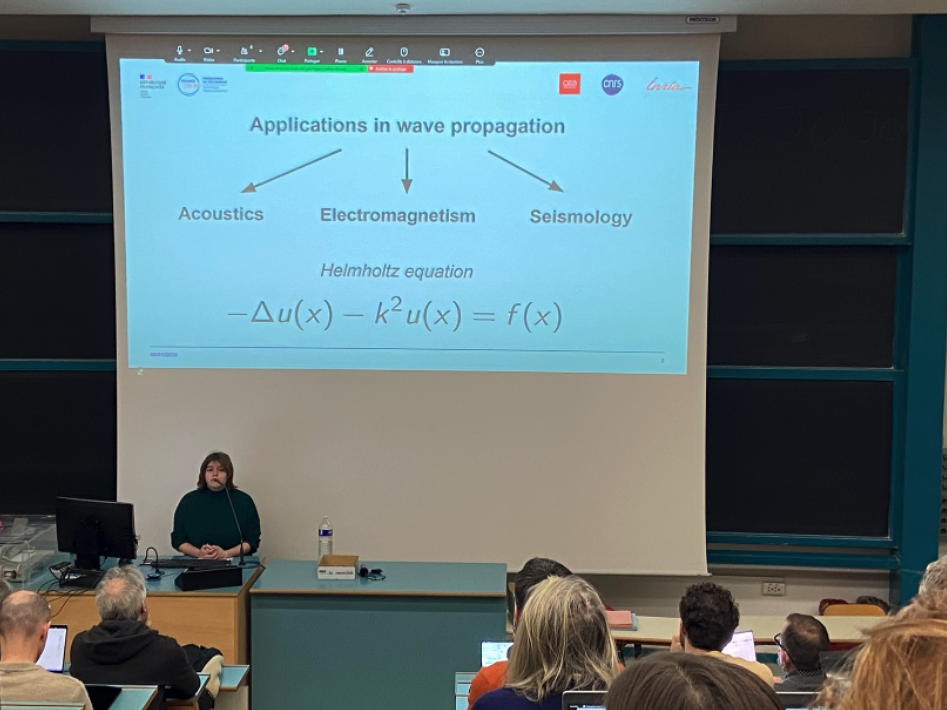

The General Assembly of the Exa-MA project took place at the University of Strasbourg on January 14-15, 2025. This two-day event was an opportunity to share the latest advancements of the project, strengthen collaborations between Work Packages (WPs), and discuss the next steps.

Exa-MA aims to revolutionize methods and algorithms for exascale computing: discretization, solvers, learning and model order reduction, inverse problems, optimization and uncertainty quantification. We are contributing to the software stack of future European computers.

The General Assembly gathered 83 participants, including members of the Scientific Board, external partners, and young recruits, with 15 joining online. The event featured plenary sessions, thematic workshops, and strategic discussions focused on intra- and inter-project synergies, particularly with Exa-SofT and Exa-DI. Key topics included AI, accelerators, software sustainability, and external collaborations. Strengthening ties with academic and industrial partners was also a major focus, reinforcing Exa-MA’s role in the broader exascale computing ecosystem.

Tuesday, 14 January 2025

- Welcome and General Presentations

by Hélène Barucq, Inria research scientist

and Christophe Prud’homme, professor at Université de Strasbourg

Highlighting the New Recruits:

- Hung Truong, Inria postdoctoral researcher

- Céline Van Landeghem, PhD student at Université de Strasbourg

- Thomas Saigre, Université de Strasbourg researcher

- Christos Georgiadis, Inria postdoctoral researcher

- Daria Hrebenshchykova, PhD student at Université Côte d’Azur

- Mahamat Nassouradine, CEA PhD student

- Transverse working group on AI

by Emmanuel Franck, Inria research scientist

Highlighting the New Recruits:

- Antoine Simon, CEA research engineer

- Sébastien Dubois, CEA research engineer

- Alexandre Hoffmann, Inria PhD student

- General workshop on interactions within the project

by Mark Asch, professor at Université de Picardie

Luc Giraud, Inria research scientist

and Jean-Pierre Vilotte, CNRS research scientist

Highlighting the New Recruits:

- Alexandre Pugin, Inria PhD student

- Hélène Hénon, PhD student at Université Grenoble Alpes

- Hassan Ballout, PhD student at Université de Strasbourg

- Presentation of the NumPEx transverse working group on accelerators (GPUs)

by Philippe Helluy, professor at Université de Strasbourg

Highlighting the New Recruits:

- Pierre Dubois, CEA PhD student

- Amaury Bélières, PhD student at Université de Strasbourg

- Private meeting of the scientific board

Wednesday, 15 January 2025

- General workshop on interactions with external partners

by Hélène Barucq and Christophe Prud’homme

- Workshop on WP1 and WP3: discretization and solvers

by Isabelle Ramière, CEA research scientist

Pierre Alliez, Inria research scientist

Hélène Barucq

and Vincent Faucher, CEA research scientist

- Workshop on WP2 and WP5: optimization and AI

by El Ghazali Talbi, professor at Université de Lille

Stéphane Lanteri, Inria research scientist

and Emmanuel Franck

- Workshop on WP4 and WP6: uncertainty quantification, inverse problems and data assimilation

by Josselin Garnier, professor at École polytechnique

Clément Gauchy, CEA research staff member

Arthur Vidard, Inria research scientist

Hélène Barucq

and Florian Faucher, Inria research scientist

- Feedback on workshop 1

by Isabelle Ramière, Vincent Faucher and Pierre Alliez

- Feedback on workshop 2

by El Ghazali Talbi

- Feedback on workshop 3

by Josselin Garnier and Arthur Vidard

- Conclusion

by Christophe Prud’homme

- Informal exchanges

Attendees

- Pierre Alliez, Inria

- Ani Anciaux Sedrakian, IFPEN (Online)

- Mark Asch, Université de Picardie

- Hassan Ballout, Université de Strasbourg

- Hélène Barucq, Inria

- Amaury Bélières–Frendo, Université de Strasbourg

- Anne Benoit, ENS

- Jérôme Bobin, CEA

- Matthieu Boileau, Université de Strasbourg

- Jérôme Bonelle, EDF

- Jed Brown, University of Colorado (Online)

- Ansar Calloo, CEA

- Vincent Chabannes, Université de Strasbourg

- Xinye Chen, Sorbonne Université (Online)

- Aurelien Citrain, Inria

- Javier Andrés Cladellas Herodier, Université de Strasbourg

- Susanne Claus, ONERA

- Raphaël Côte, Université de Strasbourg

- Clémentine Courtès, Université de Strasbourg

- Grégoire Danoy, Université de Luxembourg

- Etienne Decossin, EDF

- Romain Denefle, EDF

- Pierre Dubois, CEA

- Sébastien Dubois, École Polytechnique

- El Ghazali Talbi, Université de Lille

- Vincent Faucher, CEA

- Florian Faucher, Inria

- Emmanuel Franck, Inria

- Josselin Garnier, École Polytechnique

- Clément Gauchy, CEA

- Christos Georgiadis, Inria

- Christophe Geuzaine, Université de Liège (Online)

- Mathieu Goron, CEA (Online)

- Loic Gouarin, École Polytechnique

- Denis Gueyffier, ONERA (Online)

- Philippe Helluy, Université de Strasbourg

- Hélène Hénon, Inria

- Jan Hesthaven, Karlsruhe Institute of Technology (Online)

- Alexandre Hoffmann, École Polytechnique

- Daria Hrebenshchykova, Inria

- Bertrand Iooss, EDF

- Fabienne Jézéquel, Université Paris-Panthéon-Assas (Online)

- Pierre Jolivet, CNRS (Online)

- Baptiste Kerleguer, CEA

- Mickael Krajecki, Université de Reims

- Catherine Lambert, CERFACS

- Pierre Ledac, CEA

- Patrick Lemoine, Université de Strasbourg

- Sebastien Loriot, Geometry Factory

- Luc Giraud, Inria

- Nassouradine Mahamat Hamdan, CEA

- Bahia Maoili, ANR (Online)

- Marc Massot, École Polytechnique

- Pierre Matalon, École Polytechnique

- Lois McInnes, Argonne National Laboratory (Online)

- Katherine Mer-Nkonga, CEA (Online)

- Victor Michel-Dansac, Inria

- Axel Modave, ENSTA (Online)

- Vincent Mouysset, ONERA

- Frédéric Nataf, Sorbonne Université

- Laurent Navoret, Université de Strasbourg

- Lars Nerger, Alfred Wegener Institute

- Augustin Parret-Fréaud, Safran

- Lucas Pernollet, CEA

- Raphaël Prat, CEA

- Clémentine Prieur, Université de Grenoble (Online)

- Christophe Prud’homme, Université de Strasbourg

- Alexandre Pugin, Inria

- Isabelle Ramière, CEA

- Thomas Saigre, Université de Strasbourg

- Eric Savin, ONERA

- Antoine Simon, École Polytechnique

- Bruno Sudret, ETH Zürich

- Nicolas Tardieu, EDF

- Isabelle Terrasse, Airbus

- Sébastien Tordeux, Inria

- Pierre-Henri Tournier, Sorbonne Université

- Christophe Trophime, CNRS

- Hung Truong, Université de Strasbourg

- Céline Van Landeghem, Université de Strasbourg

- Arthur Vidard, Inria

- Jean-Pierre Vilotte, CNRS

- Raphael Zanella, Sorbonne Université (Online)

© PEPR NumPEx

NumPEx newsletter n°2 - 2025 with NumPEx!


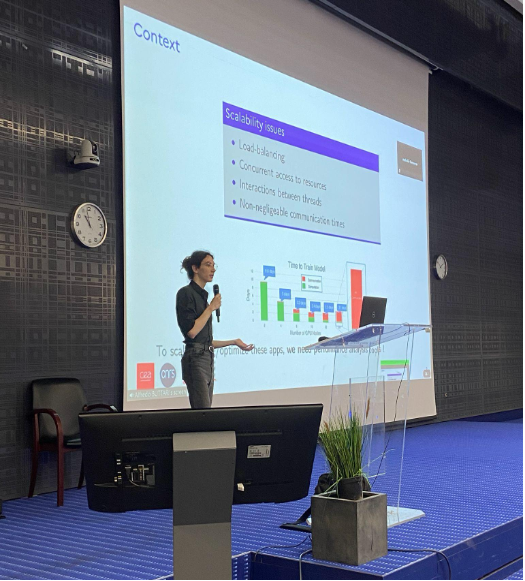

The 2024 annual meeting of Exa-SofT

The second Exa-SofT annual assembly, held on November 7th-8th, 2024 at ENSEEIHT in Toulouse, brought together over 50 participants from academia and industry to share progress on scientific computing applications, strengthen collaborations, and introduce the new members of Exa-SofT.

Thursday, 7 November 2024

- Opening Talk

by Raymond Namyst, professor at University of Bordeaux

and Alfredo Buttari, CNRS research scientist

- WP1 Scientific presentation – COMET: from dynamic data-parallel dataflows to task graphs

by Jerry Lacmou Zeutouo, associate professor at IUT Amiens

and Christian Perez, Inria research scientist

- WP4 Scientific presentation – Tensor computations

by Atte Torri, PhD student at Paris-Saclay University

- General workshop – Exa-SofT software stack consolidation

moderated by Abdou Guermouche, associate professor at University of Bordeaux

and Christian Perez

- WP2 & WP1 Scientific presentation – Polyhedral model for Kokkos code optimization

by Ugo Battiston, Inria PhD student

- WP3 Scientific presentation – Recursive tasks

by Thomas Morin, PhD student at University of Bordeaux

- WP2 Scientific presentation – Automatic multi-versioning of computation kernels

by Raphaël Colin, Inria PhD student under the supervision of Philippe Clauss and Thierry Gautier

- WP4 & WP6 Scientific presentation – Improving energy efficiency of HPC applications

by Albert d’Aviau de Piolant, PhD student at University of Bordeaux

Friday, 8 November 2024

- NumPEx Energy working group feedback

by Georges Da Costa, professor at University of Toulouse

and Amina Guermouche, associate professor at Bordeaux INP

- General workshop – Integration of Exa-SofT developments into applications

by Marc Pérache, CEA research scientist

and François Trahay, professor at Telecom SudParis

- WP5 Scientific presentation – PALLAS: a generic trace format for large HPC trace analysis

by Catherine Guelque, PhD student at Telecom SudParis

- WP5 & WP6 Scientific presentation – Fine-grain energy measurement

by Jules Risse, PhD student at Telecom SudParis

- NumPEx GPU working group feedback

by Samuel Thibault, professor at University of Bordeaux

Attendees

- Ugo Battiston, Inria

- Julien Bigot, CEA

- Alfredo Buttari, CNRS

- Philippe Clauss, Inria

- Henri Calandra, Total Energies

- Terry Cojean, Eviden

- Raphaël Colin, Inria

- Benoit Combemale, Inria

- Jean-Marie Couteyen, Airbus

- Albert d’Aviau de Piolant, Inria

- Georges Da Costa, Université de Toulouse

- Alexandre Denis, Inria

- Nicolas Ducarton, Inria

- Pierre Esterie, Inria

- Ewen Brune, Inria

- Mathieu Faverge, Inria

- Thierry Gautier, Inria

- David Goudin, Eviden

- Karmijn Hoogveld, Université de Toulouse

- Catherine Guelque, Telecom SudParis

- Amina Guermouche, Bordeaux INP

- Abdou Guermouche, Université de Bordeaux

- Julien Hermann, CNRS

- Jerry Lacmou Zeutouo, Université de Picardie

- Marc Pérache, CEA

- Raymond Namyst, Université de Bordeaux

- Nicolas Brieuc, Inria

- Alena Kopanicakova, Toulouse INP

- Christian Perez, Inria

- Luc Giraud, Inria

- Alejandro Estana, Université de Toulouse

- Lucas Pernollet, CEA

- Millian Poquet, Université de Toulouse

- Jules Risse, Telecom SudParis

- Julien Vanharen, Inria

- Alec Sadler, Inria

- Nicolas Renon, Université de Toulouse

- Philippe Swartvagher, Inria

- Samuel Thibault, Université de Bordeaux

- François Trahay, Telecom SudParis

- Pierre Matalon, École polytechnique

- Atte Torri, Université Paris-Saclay

- Bora Ucar, ENS

- Pierre-André Wacrenier, Université de Bordeaux

- Damien Gratadour, Université Paris Cité

- Petr Vacek, IFPEN

- Matthieu Robeyns, Université Paris-Saclay

- Nathalie Furmento, CNRS

© PEPR NumPEx

The third co-design and co-development workshop of Exa-DI on "Artificial Intelligence for HPC@Exascale"

The third co-design/co-development workshop of the Exa-DI project (Development and Integration) of the PEPR NumPEx was dedicated to “Artificial Intelligence for HPC@Exascale”, targeting the two topics “Image analysis @ exascale” and “Data analysis and robust inference @ exascale”. It took place on October 2 and 3, 2024 at the Espace La Bruyère, Du Côté de la Trinité (DCT) in Paris.

This two-day, face-to-face workshop brought together Exa-DI members, members of the other NumPEx projects (Exa-MA: Methods and Algorithms for Exascale; Exa-SofT: HPC Software and Tools; Exa-DoST: Data-oriented Software and Tools for the Exascale; and Exa-AToW: Architectures and Tools for Large-Scale Workflows), application demonstrators (ADs) from various research and industry sectors, and experts. Together they discussed advancements and future directions for the integration of artificial intelligence into HPC/HPDA workflows at exascale, targeting the two topics “Large image analysis” and “Data analysis and robust inference”.

This workshop is the third in a series of co-design/co-development workshops whose main objective is to promote software stack co-development strategies that accelerate exascale development and the performance portability of computational science and engineering applications. It differs slightly from the previous two in its forward-looking character: it targets the growing importance of rapidly evolving AI-driven and AI-coupled HPC/HPDA workflows in “Large image analysis @ exascale” and “Data analysis (simulation, experiments, observation) & robust inference @ exascale”. Its main objectives are: first, to co-develop a shared understanding of the different modes of coupling AI into HPC/HPDA workflows; second, to co-identify the execution motifs most commonly found in scientific applications, in order to drive the co-development of collaborative, specific benchmarks or proxy apps for evaluating the end-to-end performance of AI-coupled HPC/HPDA workflows; and finally, to co-identify the software components (libraries, frameworks, data communication, workflow tools, abstraction layers, programming and execution environments) to be co-developed and integrated in order to improve and accelerate critical components.

Key sessions included:

- Introduction and Context: Setting the stage for the workshop’s two main topics as well as presenting the GT IA, a transverse action in NumPEx.

- Attendees Self-Introduction: Allowing attendees to introduce themselves and their interests.

- Various Sessions: These sessions featured talks on the challenges to tackle and bottlenecks to overcome (execution speed, scalability, volume of data…), on the type, format and volume of the data currently investigated, on the frameworks or programming languages currently used (e.g., Python, PyTorch, JAX, C++) and on the typical elementary operations performed on data.

- Discussions and Roundtables: These sessions gave attendees the opportunity to discuss and share insights on the presented topics, in order to determine a strategy for tackling the challenges of the co-design and co-development process.

Invited speakers

- Jean-Pierre Vilotte from CNRS, member of Exa-DI, who provided the introductory context for the workshop.

- Thomas Moreau from Inria, member of Exa-DoST, presenting the GT IA, a transverse action in NumPEx.

- Tobias Liaudat from CEA, discussing fast and scalable uncertainty quantification for scientific imaging.

- Damien Gratadour from CNRS, addressing how to build new brains for giant astronomical telescopes with deep neural networks.

- Antoine Petiteau from CEA, discussing data analysis for observing the Universe with gravitational waves at low frequency.

- Kevin Sanchis from Safran AI, addressing benchmarking self-supervised learning methods in remote sensing.

- Hugo Frezat from Université Paris Cité, presenting learning subgrid-scale models for turbulent rotating convection.

- Benoit Semelin from Sorbonne Université, discussing simulation-based inference with cosmological radiative hydrodynamics simulations for SKA.

- Bruno Raffin & Thomas Moreau from Inria, presenting Machine Learning based analysis of large simulation outputs in Exa-DoST.

- Julián Tachella from CNRS, presenting DeepInverse: a PyTorch library for solving inverse problems with deep learning.

- Erwan Allys from ENS-PSL, exploring generative models and component separation in the limited-data regime with the scattering transform.

- François Lanusse from CNRS, discussing multimodal pre-training for scientific data: towards large data models for astrophysics (online).

- Christophe Kervazo from Telecom Paris, addressing interpretable and scalable deep learning methods for imaging inverse problems.

- Eric Anterrieu from CNRS, exploring a deep-learning-based approach to imaging radiometry by aperture synthesis and its implementation.

- Philippe Ciuciu from CEA, addressing Computational MRI in the deep learning era.

- Pascal Tremblin from CEA, characterizing patterns in HPC simulations using AI driven image recognition and categorization.

- Bruno Raffin from Inria, member of Exa-DI, presenting software packaging in Exa-DI.

Outcomes and impacts

Many interesting and fruitful discussions took place during this prospective workshop. These discussions first allowed us to progress in understanding the challenges and bottlenecks underpinning the AI-driven HPC/HPDA workflows most commonly found in the ADs. Then, a first series of associated issues to be addressed was identified. These issues can be grouped into two main axes: (i) image processing of large volumes, with images resulting either from simulations or from experiments, and (ii) exploration of high-dimensional and multimodal parameter spaces.

One of the most interesting issues that emerged from these discussions concerns the NumPEx software stack, in particular how it could be extended beyond support for classic AI/ML libraries (e.g., TensorFlow, PyTorch) to support the concurrent, real-time coupled execution of AI and HPC/HPDA workflows, in ways that allow the AI systems to steer or inform the HPC/HPDA tasks and vice versa.

A first challenge is the coexistence of, and communication between, HPC/HPDA and AI tasks in the same workflows. This communication is mainly impaired by the difference in programming models used in HPC (mainly C, C++ and Fortran) and AI (mainly Python). It calls for more unified data plane management, in which high-level data abstractions are exposed and the complexities of format conversion, data storage and data transport are hidden from both HPC simulations and AI models. A second challenge concerns using the insight provided by AI models and simulations (for instance, in identifying the execution motifs commonly found in the ADs) to guide, steer, or modify the shape of the workflow by triggering or stopping HPC/HPDA tasks. This implies that workflow management systems must be able to ingest and react dynamically to inputs coming from the AI models. This should drive the co-development of new libraries, frameworks or workflow tools supporting AI integration into HPC/HPDA workflows.

In addition, these discussions highlighted that an important upcoming action would be to build cross-functional collaboration between the development of software and workflow components and their integration with the overall NumPEx technologies, and to streamline developer and user workflows.

It was therefore decided during this workshop to set up a working group addressing these issues, with the ultimate goal of building a suite of shared, well-specified proxy apps and benchmarks with well-identified data and comparison metrics. Several teams of ADs and experts have expressed their interest in joining this working group. A first meeting with all interested participants will be organized shortly.

Attendees

- Jean-Pierre Vilotte, CNRS and member of Exa-DI

- Valérie Brenner, CEA and member of Exa-DI

- Jérôme Bobin, CEA and member of Exa-DI

- Jérôme Charousset, CEA and member of Exa-DI

- Mark Asch, Université Picardie and member of Exa-DI

- Bruno Raffin, Inria and member of Exa-DI and Exa-DoST

- Rémi Baron, CEA and member of Exa-DI

- Karim Hasnaoui, CNRS and member of Exa-DI

- Felix Kpadonou, CEA and member of Exa-DI

- Thomas Moreau, Inria and member of Exa-DoST

- Erwan Allys, ENS-PSL and application demonstrator

- Damien Gratadour, CNRS and application demonstrator

- Antoine Petiteau, CEA and application demonstrator

- Hugo Frezat, Université Paris Cité and application demonstrator

- Alexandre Fournier, Institut de physique du globe and application demonstrator

- Tobias Liaudat, CEA

- Jonathan Kem, CEA

- Kevin Sanchis, Safran AI

- Benoit Semelin, Sorbonne Université

- Julián Tachella, CNRS

- François Lanusse, CNRS

- Christophe Kervazo, Telecom Paris

- Eric Anterrieu, CNRS

- Philippe Ciuciu, CEA

- Pascal Tremblin, CEA

© Valérie Brenner

Brice Goglin and Samuel Thibault: HPC mapping experts awarded by the French Académie des sciences

Article originally published on the Inria website here

Every November, the French Académie des sciences unveils its prestigious awards. This year, NumPEx member Samuel Thibault and Brice Goglin have been awarded the Inria – Académie des sciences – Dassault Systèmes Innovation Prize.

Samuel Thibault is responsible for the runtime part of the Exa-SofT software stack. More specifically, he is in charge of the integration of communications with task scheduling, high-level expression of the division of computational tasks, and fault tolerance. Samuel is also co-leader of the Accelerator working group.

Learn more about his research in the article published by Inria.

Photo credit: Académie des sciences – Mathieu Baumer

Call for proposals "Numérique pour l’Exascale"

The NumPEx program is launching its first call for projects to support advances in high-performance computing (HPC), high-performance data analysis (HPDA) and artificial intelligence (AI). Our France 2030 research program aims to develop software capable of operating future exascale machines, and to prepare the main scientific and industrial application codes.

This call is structured around three axes:

- Emerging AI methods, algorithms and software for scientific computing and HPC for AI.

- Programming models adapted to accelerated architectures.

- Workflows for scientific data analysis, with the SKA project as a use case.

This call for projects has a budget of 4 million euros. It will fund 1 to 2 projects per axis, for a maximum duration of 48 months.

The requested funding must be between 500 k€ and 1 M€, depending on the theme of the project.

A given project leader can be responsible for only one PEPR project, including targeted projects.

Deadline for applications: April 15th, 2025 (11h00 CET).

Josselin Garnier appointed to the French Académie des Sciences

Article originally published on the French Académie des sciences website here

Josselin Garnier, professor at École Polytechnique and member of Exa-MA, is one of 18 scientists appointed to the French Académie des sciences in recognition of his significant contributions to research.

Alongside Clément Gauchy, CEA research staff member, Josselin Garnier coordinates the “Uncertainty quantification” work package of the Exa-MA project. The objective of this work package is to leverage exascale computing to implement advanced analysis methods that require a large number of executions of complex simulation codes, in order to quantify uncertainties in these simulations and to solve inverse or optimization problems in the presence of uncertainties. The work carried out aims to quantify the confidence that can be placed in the predictions derived from these simulations and to facilitate decision-making based on them.

Learn more about his career and research in the article published by the French Académie des sciences.

Photo credit Adobe Stock