Exa-DoST: Data-oriented Software and Tools for the Exascale

The advent of future Exascale supercomputers raises multiple data-related challenges.

To enable applications to fully leverage the upcoming infrastructures, a major challenge concerns the scalability of techniques used for data storage, transfer, processing and analytics.

Additional key challenges emerge from the need to adequately exploit emerging technologies for storage and processing, leading to new, more complex storage hierarchies.

Finally, it is becoming necessary to support increasingly complex hybrid workflows that combine simulation, analytics and learning, running at extreme scales across supercomputers interconnected with clouds and edge-based systems.

The Exa-DoST project will address most of these challenges, organized in 3 areas:

1. Scalable storage and I/O;

2. Scalable in situ processing;

3. Scalable smart analytics.

Many of these challenges have already been identified, for example by the Exascale Computing Project in the United States or by many research projects in Europe and France.

As part of the NumPEx program, Exa-DoST targets a much higher technology readiness level than previous national projects concerning the HPC software stack.

It will address the major data challenges by proposing operational solutions co-designed and validated in French and European applications.

This will fill the gap left by previous international projects, ensuring that French and European needs are taken into account in the roadmaps for building the data-oriented Exascale software stack.

Consortium

The consortium gathers 11 core research teams, 6 associate research teams and one industrial partner. They cover the two sides of the domain of expertise required in the project. On one hand, computer science research teams bring expertise on data-related research on supercomputing infrastructures. On the other hand, computational science research teams bring expertise on the challenges faced and handled by the most advanced applications. These teams represent 12 of the major French establishments involved in the field of data handling at Exascale. Below is a brief description of the core teams.

| Core teams | Institutions |
|---|---|
| DataMove | CNRS, Grenoble-INP, Inria, Université Grenoble Alpes |
| DPTA | CEA |
| IRFM | CEA |
| JLLL | CNRS, Observatoire de la Côte d’Azur, Université Côte d’Azur |
| KerData | ENS Rennes, Inria, INSA Rennes |
| LESIA | CNRS, Observatoire de Paris, Sorbonne Université, Université Paris Cité |
| LAB | CNRS, Université de Bordeaux |
| MdlS | CEA, CNRS, Université Paris-Saclay, Université de Versailles Saint-Quentin-en-Yvelines |
| MIND | CEA, Inria |
| SANL | CEA |
| SISR | CEA |
| TADaaM | Bordeaux INP, CNRS, Inria, Université de Bordeaux |

| Associated teams | Institutions |
|---|---|
| CMAP | CNRS, École Polytechnique |
| IRFU | CEA |
| M2P2 laboratory | CNRS, Université Aix-Marseille |
| Soda | Inria |
| Stratify | CNRS, Grenoble-INP, Inria, Université Grenoble-Alpes |
| Thoth | CNRS, Grenoble-INP, Inria, Université Grenoble-Alpes |
| DataDirect Network (DDN) company | |

DataMove

DataMove is a joint research team between CNRS, Grenoble-INP, Inria and Université Grenoble Alpes and is part of the Computer Science Laboratory of Grenoble (LIG, CNRS/Université Grenoble Alpes). Created in 2016, DataMove today comprises 9 permanent members and about 20 PhD students, postdocs and engineers. The team’s research activity is focused on High Performance Computing. Moving data on large supercomputers is becoming a major performance bottleneck, and the situation worsens from one supercomputer generation to the next. The DataMove team focuses on data-aware large-scale computing, investigating approaches to reduce data movement on large-scale HPC machines along two main research directions: data-aware scheduling algorithms for job management systems, and large-scale in situ data processing.

DPTA

DPTA CEA/DAM is recognized in the HPC community for developing massively parallel multi-physics simulation codes. The team from the Department of Theoretical and Applied Physics (DPTA) of the DIF, led by Laurent Colombet, developed, in collaboration with Bruno Raffin (director of research at Inria and DataMove member), a high-performance in situ component for the simulation code ExaStamp, which will serve as a starting point for contributions to the Exa-DoST project dedicated to in situ processing.

IRFM

The Institute for Magnetic Fusion Research is the main magnetic fusion research laboratory in France. For more than 50 years, it has carried out both experimental and theoretical research on thermonuclear magnetic fusion. The IRFM team brings its HPC expertise to the project through the 5D gyrokinetic code GYSELA, which it has been developing for 20 years through national and international collaborations, with strong interaction between physicists, mathematicians and computer scientists. With an annual consumption of 150 million hours, the team already makes intensive use of the available national and European petascale resources. Because of the multi-scale physics at play and the duration of discharges, it is already known that ITER core-edge simulations will require exascale HPC capabilities. GYSELA will serve to build relevant illustrators for Exa-DoST, demonstrating the benefits of Exa-DoST’s contributions (i.e., how the libraries enhanced, optimized and integrated within Exa-DoST will benefit GYSELA-based application scenarios).

JLLL

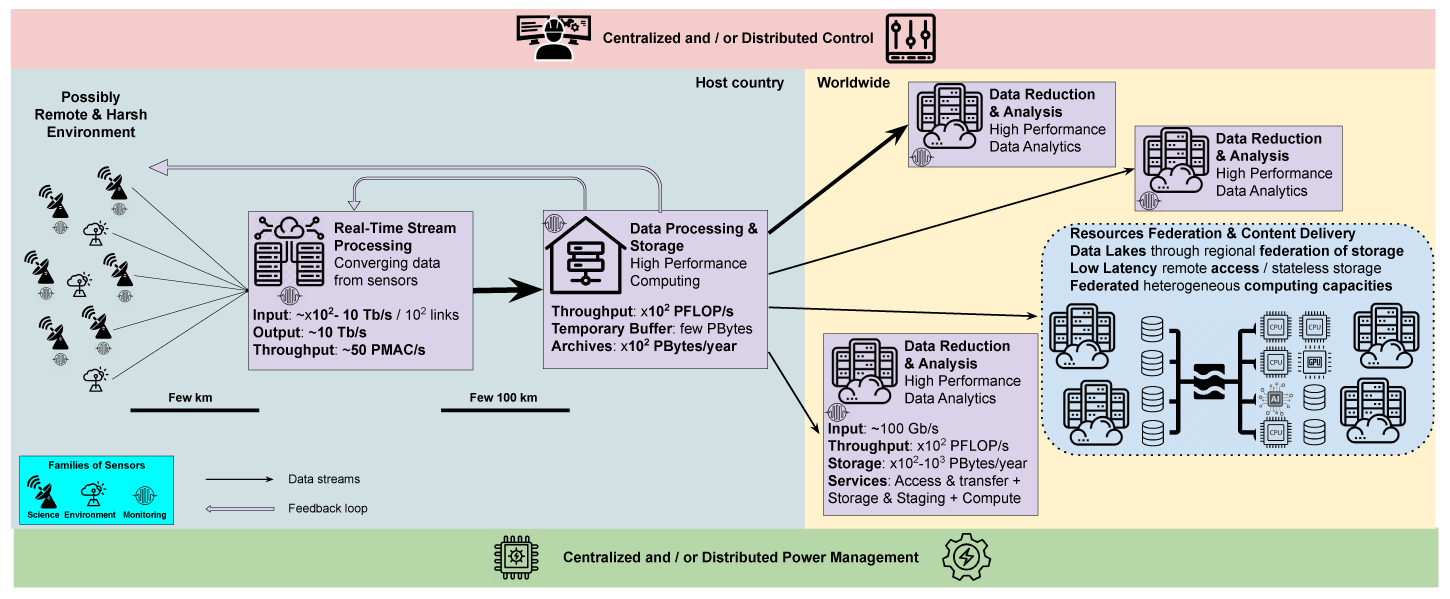

The JL Lagrange Laboratory leads the French contribution to the SKA1 global observatory project, and contributes to the procurement of its two supercomputers (SDP) and to the establishment of SKA Regional Data Centers (SRCs). JLLL is part of the Observatoire de la Côte d’Azur (OCA, CNRS/Université Côte d’Azur), an internationally recognized center for research in Earth Sciences and Astronomy. With some 450 staff, OCA is one of 25 French astronomical observatories responsible for the continuous and systematic collection of observational data on the Earth and the Universe. Its role is to explore, understand and transfer knowledge about Earth sciences and astronomy, whether in astrophysics, geosciences, or related sciences such as mechanics, signal processing, or optics. One of OCA’s core contributions to SKA is made through the SCOOP Agile SAFe team, which works on hardware/software co-design for the two SKA supercomputers.

KerData

KerData is a joint team of the Inria Center at Rennes University and of the French laboratory for research and innovation in digital science and technology (IRISA, CNRS/Université de Rennes 1). Its research focuses on designing innovative architectures and systems for data I/O, storage and processing on extreme-scale systems: (pre-)Exascale high-performance supercomputers, and cloud-based and edge-based infrastructures. In particular, it addresses the data-related requirements of new, complex applications that combine simulation, analytics and learning and require hybrid execution infrastructures (supercomputers, clouds, edge).

LESIA

The Laboratoire d’Études Spatiales en Astrophysique is strongly engaged in low-frequency radio-astronomy, with important responsibilities in the SKA and its precursor NenuFAR, together with a long history of using major radio-astronomy facilities such as LOFAR and MeerKAT. LESIA is part of Observatoire de Paris (OBS.PARIS, CNRS/Université de Paris-PSL), a national research center in astronomy and astrophysics that employs approximately 1000 people (750 in permanent positions) and is the largest astronomy center in France. Beyond their unique expertise in radio-astronomy, the teams from OBS.PARIS bring strong competences in the design and construction of giant instruments, including the associated HPC/HPDA capabilities dedicated to astronomical data processing and reduction.

LAB

The Laboratoire d’astrophysique de Bordeaux has been carrying out research and development activities for SKA since 2015 to produce detectors for band 5 of the SKA-MID array. On-site prototype demonstration is scheduled in South Africa around mid-2024. This is currently the central and unique French contribution to the antenna/receptor hardware/firmware. In addition, LAB wishes to strengthen its contribution to SKA through relevant activities for SKA-SDP. More generally, scientists and engineers at LAB have been working together on radio-astronomy science, software, and major instruments for several decades (ALMA, NOEMA, SKA…), producing cutting-edge scientific discoveries in astrochemistry, stellar and planetary formation, and the study of the Solar system’s giant planets.

MdlS

The Maison de la Simulation is a joint laboratory between CEA, CNRS, Université Paris-Saclay and Université Versailles Saint-Quentin. It specializes in computer science for high-performance computing and numerical simulations in close connection with physical applications. The main research themes of MdlS are parallel software engineering, programming models, scientific visualization, artificial intelligence, and quantum computing.

MIND

MIND is an Inria team doing research at the intersection of statistics, machine learning and signal processing, with the ambition to impact neuroscience and neuroimaging research. Additionally, MIND is supported by CEA and affiliated with NeuroSpin, the largest neuroimaging facility in France dedicated to ultra-high magnetic fields. The MIND team is a spin-off from the Parietal team, located at Inria Saclay and CEA Saclay.

SANL

SANL is a team from the computer science department (DSSI) of CEA, led by Marc Pérache, CEA director of research, that develops tools enabling simulation codes to manage inter-code and post-processing outputs on CEA supercomputers. The team is involved in I/O for scientific computing codes through the Hercule project. Hercule is an I/O layer optimized to handle large amounts of data at scale on large runs. Hercule manages data semantics to support weak code coupling through the filesystem (inter-code). The team has also studied in situ analysis through the PaDaWAn research project.

SISR

The SISR team at CEA is in charge of all data management activities inside the massive HPC centers hosted and managed by CEA/DIF. These activities are varied: designing and acquiring new mass storage systems, installing them, and maintaining them in operational condition. Beyond pure system administration tasks, the SISR team develops a large framework of open-source software dedicated to data management and mass storage. Strongly involved in R&D efforts, the SISR is a major actor in the technical and scientific collaboration between CEA and ATOS, and takes part in many EuroHPC-funded projects (in particular, the SISR drives the IO-SEA project).

TADaaM

TADaaM is a joint research team between the University of Bordeaux, Inria, CNRS, and Bordeaux INP, part of the Laboratoire Bordelais de Recherche en Informatique (LaBRI – CNRS/Bordeaux INP/Université de Bordeaux). Its goal is to manage data at system scale by working on the way data is accessed through the storage system, transferred via high-speed networks or stored in (heterogeneous) memory. To achieve this, TADaaM plans to design and build a stateful system-wide service layer for data management in HPC systems, which should reconcile applications’ needs with system characteristics, and combine information about both to optimize and coordinate the execution of all running applications at system scale.
