The 2025 Exa-DoST general assembly

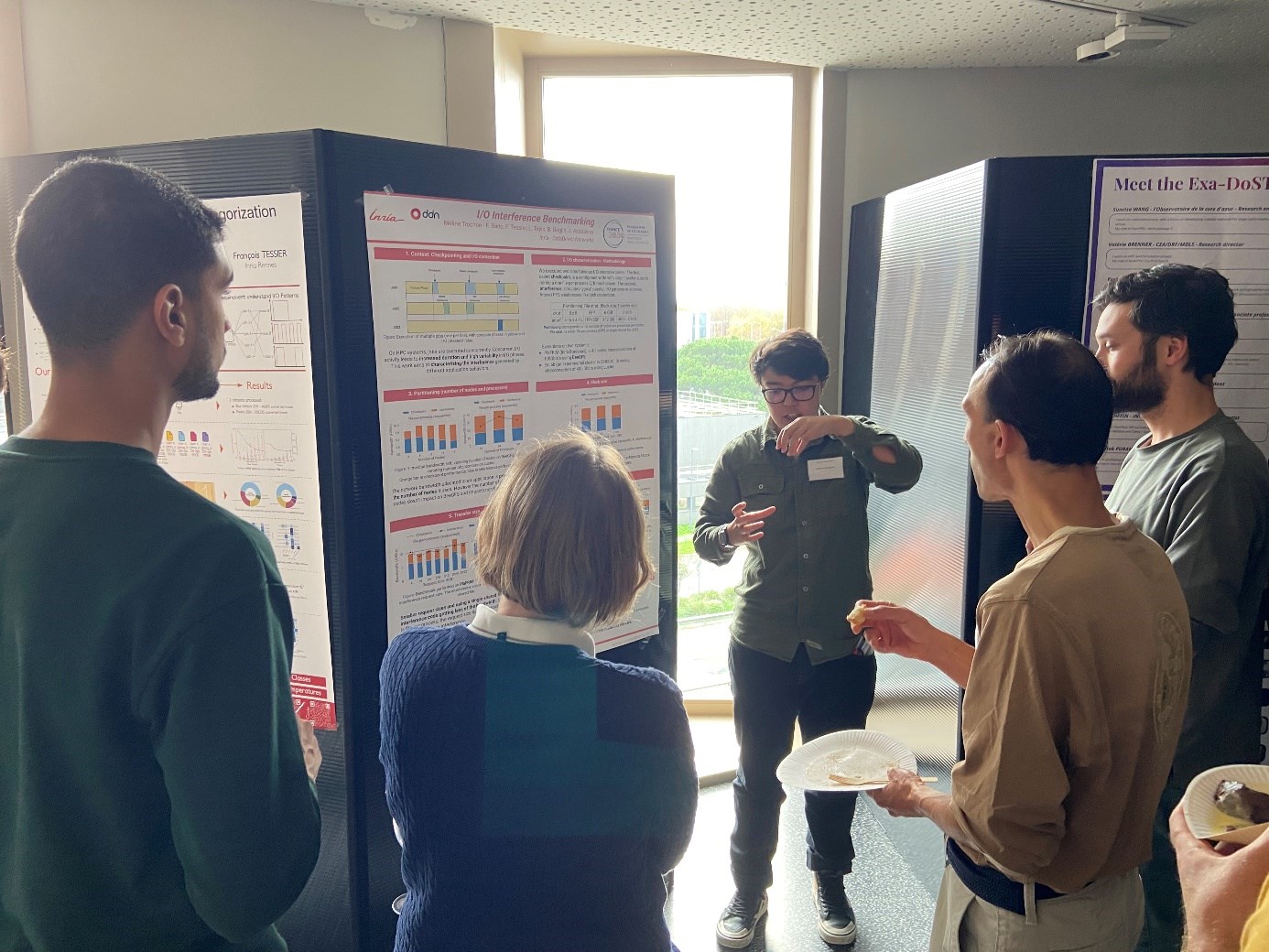

The 2025 Exa-DoST Annual Assembly took place from 5 to 7 November 2025, bringing together 65 researchers and engineers from academia and industry to discuss the latest progress, prepare the milestones of the work packages, and welcome the latest recruits.

Exa-DoST (Data-oriented Software and Tools for the Exascale) is one of the five projects of the NumPEx program. It addresses the major data challenges by proposing operational solutions co-designed and validated in French and European applications. This will help fill the gap left by previous international projects, ensuring that French and European needs are taken into account in the roadmaps for building the data-oriented Exascale software stack.

Finally, Exa-DoST was proud to welcome its new recruits, who rose brilliantly to the challenge of presenting scientific highlights in plenary sessions and via poster sessions!

Wednesday, 5 November 2025

- An introduction or refresher to NumPEx and Exa-DoST

  by Gabriel Antoniu, Inria research scientist and Exa-DoST co-leader, and Julien Bigot, CEA research scientist and Exa-DoST co-leader

- A few introductory words for everyone

  by Gabriel Antoniu and Julien Bigot

- First results and 2 scientific focuses for the workpackages:

  - WP1 – I/O and data storage

    by Francieli Boito, Inria research scientist and Exa-DoST WP leader, and François Tessier, Inria research scientist and Exa-DoST WP leader

  - WP2 – In situ data processing

    by Yushan Wang, CEA research scientist and Exa-DoST WP leader, and Laurent Colombet, CEA research scientist and Exa-DoST WP leader

  - WP3 – ML-based data analytics

    by Thomas Moreau, Inria research scientist and Exa-DoST WP leader, and Bruno Raffin, Inria research scientist and Exa-DoST WP leader

  - WP4:

    by Virginie Grandgirard, CEA research scientist and Exa-DoST WP leader, and Damien Gratadour, Université Paris Cité professor and Exa-DoST WP leader

Thursday, 6 November 2025

- Talk by François Mazen, Kitware

- Talk by Xavier Delaruelle, TGCC

- Breakout sessions:

  - Feedback on Gysela x WP1

    Led by Virginie Grandgirard, Francieli Boito and François Tessier

  - Feedback on SKA x WP2

    Led by Damien Gratadour and Yushan Wang, with the participation of Shan Mignot

  - Feedback on other apps (Coddex, Dyablo…) x WP3

    Led by Laurent Colombet, Thomas Moreau and Bruno Raffin

  - Feedback on Gysela x WP2

    Led by Virginie Grandgirard, Yushan Wang and Laurent Colombet

  - Feedback on SKA x WP3

    Led by Damien Gratadour, Thomas Moreau and Bruno Raffin

  - Feedback on other apps (Coddex, Dyablo…) x WP1

    Led by Laurent Colombet, Francieli Boito and François Tessier

Friday, 7 November 2025

- How to approach modularity in library design within Exa-DoST?

  by Julien Bigot

- Breakout sessions:

  - Feedback on Gysela x WP3

    Led by Virginie Grandgirard, Thomas Moreau and Bruno Raffin

  - Feedback on SKA x WP1

    Led by Damien Gratadour, Francieli Boito and François Tessier

  - Feedback on other apps (Coddex, Dyablo…) x WP2

    Led by Laurent Colombet and Yushan Wang

- Breakout sessions summary with all workpackages:

Attendees

- Mahamat Abdraman, Inria

- Jean-Thomas Acquaviva, DDN

- Gabriel Antoniu, Inria

- Julian Auriac, CEA

- Rosa Maria Badia, BSC

- Alexis Bandet, Inria

- Iheb Becher, CNRS

- Mansour Benbakoura, Inria

- Andres Bermeo Marinelli, Inria

- Julien Bigot, CEA

- Jérôme Bobin, CEA

- François Bodin, Irisa

- Francieli Boito, Université de Bordeaux

- Robin Boezennec, Inria

- Etienne Bonnassieux, Université de Bordeaux

- Eric Boyer, Genci

- Valérie Brenner, CEA

- Silvina Caino-Lores, Inria

- Franck Cappello, Argonne National Laboratory, Online

- Pierre Cesar, Inria

- Jérôme Charousset, CEA

- Mathieu Cloirec, CINES

- Arnaud Collioud, Université de Bordeaux

- Laurent Colombet, CEA

- Marwane Dalal, Laboratoire d’Astrophysique de Bordeaux

- Ariel De Vora, CEA

- Xavier Delaruelle, CEA

- Arnaud Durocher, CEA

- Sofya Dymchenko, Inria

- Hugo Gaquere, Observatoire de Paris

- Virginie Grandgirard, CEA

- Damien Gratadour, Université Paris Cité

- Amina Guermouche, Inria

- Gabriel Hautreux, CINES, Online

- Hadrien Hendrikx, Inria

- Arthur Jaquard, Inria

- Théo Jolivel, Inria

- Sylvain Joube, CEA

- Ivan Lucas, CEA

- Jakob Luettgau, Inria

- Martial Mancip, CEA

- Benoit Martin, CEA

- François Mazen, Kitware

- Yann Meurdesoif, CEA

- Shan Mignot, CNRS

- Thomas Moreau, Inria

- Jacques Morice, CEA

- Étienne Ndamlabin, Inria

- Guillaume Pallez, Inria

- Lucas Pernollet, CEA

- Abhishek Purandare, Inria

- Bruno Raffin, Inria

- Olivier Richard, Université Grenoble Alpes

- Kento Sato, Riken

- Hugo Strappazzon, Inria

- Frédéric Suter, Oak Ridge National Laboratory, Online

- François Tessier, Inria

- Samuel Thibault, Université de Bordeaux

- Luan Teylo, Inria

- Alix Tremodeux, ENS Lyon

- Méline Trochon, Inria

- Hippolyte Verninas, Inria

- Sunrise Wang, CNRS

- Yushan Wang, CEA

- Jad Yehya, Inria

©Martial Mancip / PEPR NumPEx

Exa-DI: the first mini-application resulting from co-development is now available!

Find all the information about Exa-MA here.

Following the Exa-DI general meetings, working groups were formed to produce applications on four major themes. The first mini-application on high-precision discretisation is now available.

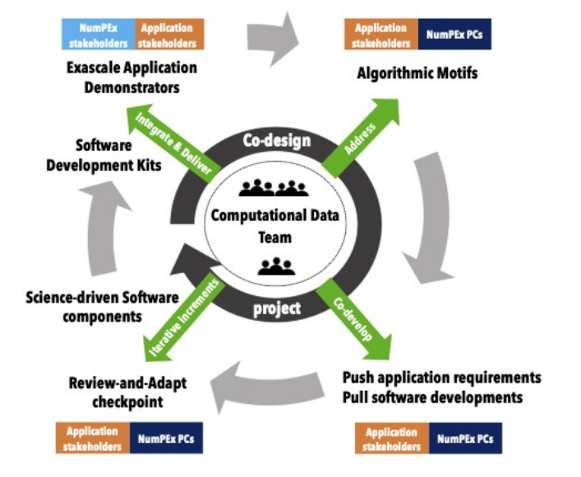

Following the Exa-DI workshops, four working groups (WGs) were formed, bringing together all the players involved in co-design and co-development: Exa-DI’s Computational and Data Science Team (CDT), members of the various targeted NumPEx projects, and application demonstration teams. These groups focus on efficient discretisation, unstructured meshes, block-structured AMR, and AI applied to linear inverse problems at exascale, and are now actively moving forward.

Thanks to these WGs, the first shared mini-applications, representative of the technical challenges of exascale applications, are currently being developed. They integrate high value-added software components (libraries, frameworks, tools) provided by other NumPEx teams. In this context, the first mini-application on high-precision discretisation is now available, with others to follow soon.

A documentation hub, set up in early 2025, is gradually centralising tutorials and technical documents of general interest for NumPEx Exa-DI. It includes: the NumPEx software catalogue, webinars and training courses, documentation on co-design and CDT packaging, and much more.

Feel free to consult it to stay up to date on the tools and resources available.

Figure: Overview of Impact-HPC.

© PEPR NumPEx

Exa-DI: Facilitating the deployment of HPC applications with Package Managers

Exa-DI is proud to present its series of training courses for users of package managers, designed to optimise their user experience.

Deploying and porting applications on supercomputers remains a complex and time-consuming task. NumPEx encourages users to leverage package managers, allowing for precise and direct control of their software stack, with a particular focus on Guix and Spack.

A series of training courses and support events has been organised to assist users:

• Tutorial: Introduction to Guix – October 2025

• Tutorial @ Compass25: Guix-deploy – June 2025

• Coding session: Publishing packages on Guix-Science – May 2025

• Tutorial: Spack for beginners (online) – April 2025

• Tutorial: Using Guix and Spack for deploying applications on supercomputers – February 2025

Switching to new deployment methods takes time. NumPEx supports users by offering training, support, software packaging, tool improvements, and partnerships with computing centres to optimise the user experience.

For more information: https://numpex-pc5.gitlabpages.inria.fr/tutorials/webinar/index.html

Photo credit: Mohammad Rahmani / Unsplash

Exa-DI: the co-design and co-development in NumPEx is moving forward

Find all the information about Exa-DI here.

The implementation of the co-design and co-development process within NumPEx is one of Exa-DI’s objectives for the production of augmented and productive software. To this end, Exa-DI has organised three workshops open to all NumPEx members.

The Exa-DI project is responsible for implementing the co-design and co-development process within NumPEx, with the aim of producing augmented and productive exascale software that is science-driven. In this context, Exa-DI has already organised three workshops: one on “Efficient discretisation for exascale PDEs”, another on “Block-structured AMR at exascale” and a third on “Artificial intelligence for exascale HPC”. These two-day in-person workshops brought together Exa-DI members, members of other NumPEx projects, teams demonstrating applications from various sectors of research and industry, and experts.

Discussions focused on:

- Challenges related to the co-design and co-development process

- Key issues

- The most pressing issues for collective development and strengthening links between NumPEx and applications

- Initiatives promoting the sustainability of exascale software and performance portability.

A very interesting and stimulating result was the establishment of working groups focused on a set of shared and well-specified mini-applications representing the cross-cutting computational and communication patterns identified. Several application teams have expressed interest in participating in these groups. To date, four working groups are actively engaged in the co-design and co-development of mini-applications, with a view to integrating and evaluating the logical sets of software components developed in the NumPEx projects.

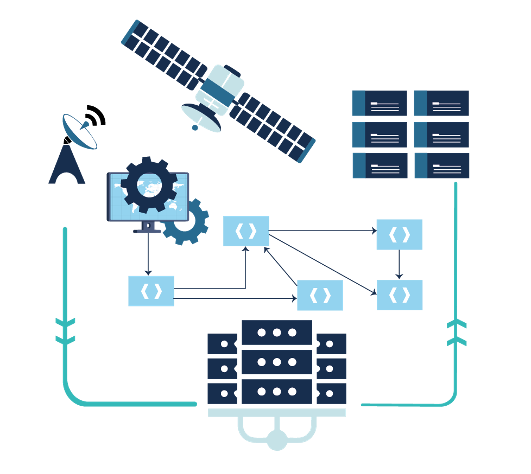

Strategy for the interoperability of digital scientific infrastructures

Find all the information about Exa-AToW here.

The evolution of data volumes and computing capabilities is reshaping the scientific digital landscape. To fully leverage this potential, NumPEx and its partners are developing an open interoperability strategy connecting major instruments, data centers, and computing infrastructures.

Driven by data produced by large instruments (telescopes, satellites, etc.) and artificial intelligence, the digital scientific landscape is undergoing a profound transformation, fuelled by rapid advances in computing, storage and communication capabilities. The scientific potential of this inherently multidisciplinary revolution lies in the implementation of hybrid computing and processing chains, increasingly integrating HPC infrastructures, data centres and large instruments.

Anticipating the arrival of the Alice Recoque exascale machine, NumPEx’s partners and collaborators (SKA-France, MesoCloud, PEPR NumPEx, Data Terra, Climeri, TGCC, Idris, Genci) have decided to coordinate their efforts to propose interoperability solutions that will enable the deployment of processing chains that fully exploit all research infrastructures.

The aim of the work is to define an open strategy for implementing interoperability solutions, in conjunction with large scientific instruments, in order to facilitate data analysis and enhance the reproducibility of results.

Figure: Overview of Impact-HPC.

© PEPR NumPEx

Impacts-HPC: a Python library for measuring and understanding the environmental footprint of scientific computing

Find all the information about Exa-AToW here.

The environmental footprint of scientific computing goes far beyond electricity consumption. Impacts-HPC introduces a comprehensive framework to assess the full life-cycle impacts of HPC, from equipment manufacturing to energy use, through key environmental indicators.

The environmental footprint of scientific computing is often reduced to electricity consumption during execution. However, this only reflects part of the problem. Impacts-HPC aims to go beyond this limited view by also incorporating the impact of equipment manufacturing and broadening the spectrum of indicators considered.

This tool also makes it possible to trace the stages of a computing workflow and document the sources used, thereby enhancing transparency and reproducibility. In a context where the environmental crisis is forcing us to consider climate, resources and other planetary boundaries simultaneously, such tools are becoming indispensable.

The Impacts-HPC library covers several stages of the life cycle: equipment manufacturing and use. It provides users with three essential indicators:

• Primary energy (MJ): more relevant than electricity alone, as it includes conversion losses throughout the energy chain.

• Climate impact (gCO₂eq): calculated by aggregating and converting different greenhouse gases into CO₂ equivalents.

• Resource depletion (g Sb eq): reflecting the use of non-renewable resources, in particular metallic and non-metallic minerals.
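To make the combination of manufacturing and use more concrete, here is a minimal Python sketch of how the three indicators could be aggregated for one job. It does not use the Impacts-HPC API: the `Footprint` class, the `job_footprint` function, the linear amortisation of manufacturing over the node lifetime, and every numeric factor are hypothetical placeholders chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Footprint:
    """The three indicators listed above (hypothetical container)."""
    primary_energy_mj: float   # MJ
    climate_gco2eq: float      # gCO2eq
    resource_g_sb_eq: float    # g Sb eq

    def scaled(self, factor: float) -> "Footprint":
        return Footprint(
            self.primary_energy_mj * factor,
            self.climate_gco2eq * factor,
            self.resource_g_sb_eq * factor,
        )

    def __add__(self, other: "Footprint") -> "Footprint":
        return Footprint(
            self.primary_energy_mj + other.primary_energy_mj,
            self.climate_gco2eq + other.climate_gco2eq,
            self.resource_g_sb_eq + other.resource_g_sb_eq,
        )


def job_footprint(node_hours, node_power_kw, per_kwh,
                  manufacturing_per_node, node_lifetime_hours):
    """Use-phase impact plus an amortised share of manufacturing impact."""
    # Use phase: electricity drawn by the job, converted with per-kWh factors.
    electricity_kwh = node_hours * node_power_kw
    use = per_kwh.scaled(electricity_kwh)
    # Manufacturing: assumed to be spread evenly over the node's lifetime.
    manufacturing = manufacturing_per_node.scaled(node_hours / node_lifetime_hours)
    return use + manufacturing


# Placeholder factors, NOT measured data.
PER_KWH = Footprint(10.0, 50.0, 0.001)
NODE_MANUFACTURING = Footprint(1000.0, 100000.0, 10.0)

total = job_footprint(
    node_hours=100,
    node_power_kw=0.5,
    per_kwh=PER_KWH,
    manufacturing_per_node=NODE_MANUFACTURING,
    node_lifetime_hours=10000,
)
print(total)
```

Even in this toy setting, the manufacturing share contributes to all three indicators, which is why looking at electricity alone gives an incomplete picture.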

This is the first time that such a tool has been offered for direct use by scientific computing communities, with an integrated and documented approach.

This library paves the way for a more detailed assessment of the environmental impacts associated with scientific computing. The next steps include integrating it into digital twin environments, adding real-time data (energy mix, storage, transfers), and testing it on a benchmark HPC centre (IDRIS).

Figure: Overview of Impacts-HPC.

© PEPR NumPEx

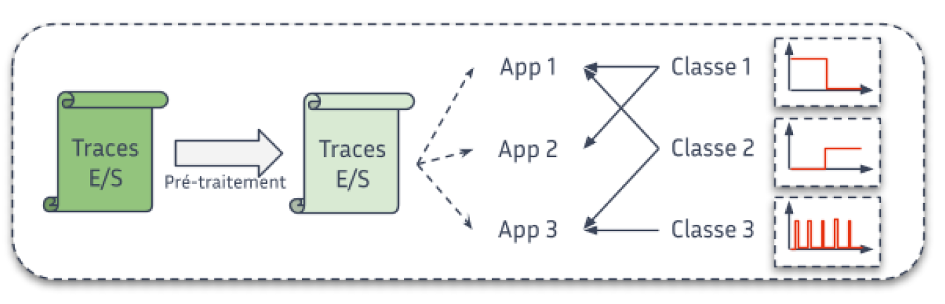

Storing massive amounts of data: better understanding for better design and optimisation

Find all the information about Exa-DoST here.

An understanding of how scientific applications read and write data is key to designing storage systems that truly meet HPC needs. Fine-grained I/O characterization helps guide both optimization strategies and the architecture of future storage infrastructures.

Data is at the heart of scientific applications, whether it be input data or processing results. For several years, data management (reading and writing, also known as I/O) has been a barrier to the large-scale deployment of these applications. In order to design more efficient storage systems capable of absorbing and optimising this I/O, it is essential to understand how applications read and write data.

Thanks to the various tools and methods we have developed, we are able to produce a detailed characterisation of the I/O behaviour of scientific applications. For example, based on supercomputer execution data, we can show that less than a quarter of applications perform regular (periodic) accesses, or that concurrent accesses to the main storage system are less common than expected.
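As an illustration of what one step of such a characterisation can look like, the sketch below flags an application as periodic when the gaps between the start times of its I/O phases are nearly constant. This is not the method used by the authors: the function `is_periodic`, the coefficient-of-variation criterion, and the thresholds are assumptions made for the example.

```python
from statistics import mean, pstdev


def is_periodic(phase_start_times, max_cv=0.1, min_phases=4):
    """Return True if I/O phases start at roughly regular intervals.

    phase_start_times: start time (in seconds) of each I/O phase of one run.
    max_cv: hypothetical threshold on the coefficient of variation of the gaps.
    min_phases: below this number of phases, periodicity is not assessed.
    """
    if len(phase_start_times) < min_phases:
        return False
    times = sorted(phase_start_times)
    gaps = [later - earlier for earlier, later in zip(times, times[1:])]
    average_gap = mean(gaps)
    if average_gap <= 0:
        return False
    # Small relative spread of the gaps means a regular (periodic) pattern.
    return pstdev(gaps) / average_gap <= max_cv


# A checkpoint roughly every 60 s versus irregular bursts.
print(is_periodic([0, 60, 120, 181, 240]))
print(is_periodic([0, 5, 90, 100, 300]))
```

A real classification would work from traces collected on the supercomputer and would need to cope with noise, missing phases and multiple overlapping periods.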

This type of result is decisive in several respects. For example, it allows us to propose I/O optimisation methods that respond to clearly identified application behaviours. Such characterisation is also a concrete element that influences the design choices of future storage systems, always with the aim of meeting the needs of scientific applications.

Figure: Step of data classification.

© PEPR NumPEx

A new generation of linear algebra libraries for modern supercomputers

Find all the information about Exa-SofT here.

Linear algebra libraries lie at the core of scientific computing and artificial intelligence. By rethinking their execution on hybrid CPU/GPU architectures, new task-based models enable significant gains in performance, portability, and resource utilization.

Libraries for solving or manipulating linear systems are used in many fields of numerical simulation (aeronautics, energy, materials) and artificial intelligence (training). We seek to make these libraries as fast as possible on supercomputers combining traditional processors and graphics accelerators (GPUs). To do this, we use asynchronous task-based execution models that maximise the utilisation of computing units.

This is an active area of research, but most existing approaches face the difficult problem of dividing the work into the ‘right granularity’ for heterogeneous computing units. Over the last few months, we have developed several extensions to a task-based parallel programming model called STF (Sequential Task Flow), which allows complex algorithms to be implemented in a much more elegant, concise and portable way. By combining this model with dynamic and recursive work partitioning techniques, we significantly increase performance on supercomputers equipped with accelerators such as GPUs, in particular thanks to the ability to dynamically adapt the granularity of calculations according to the occupancy of the computing units. For example, thanks to this approach, we have achieved a 2x speedup compared to other state-of-the-art libraries (MAGMA, PaRSEC) on a hybrid CPU/GPU machine.
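The idea of recursive partitioning with a per-device grain size can be sketched in a few lines of Python. This is a deliberately simplified illustration, not the STF extensions themselves: the function names and the grain sizes in `GRAIN` are hypothetical, and the rule is static, whereas the approach described above adapts the granularity dynamically to the occupancy of the computing units.

```python
def split_task(rows, cols, max_elems):
    """Recursively halve a (rows x cols) block until each piece has at most
    max_elems elements. Returns the list of resulting block shapes."""
    if rows * cols <= max_elems or (rows == 1 and cols == 1):
        return [(rows, cols)]
    if rows >= cols:
        top = rows // 2
        return (split_task(top, cols, max_elems)
                + split_task(rows - top, cols, max_elems))
    left = cols // 2
    return (split_task(rows, left, max_elems)
            + split_task(rows, cols - left, max_elems))


# Hypothetical grain sizes: large blocks keep a GPU efficient,
# small blocks avoid occupying a CPU core for too long.
GRAIN = {"gpu": 1024 * 1024, "cpu": 256 * 256}


def tasks_for(worker_kind, rows, cols):
    """Partition one block of work according to the target worker kind."""
    return split_task(rows, cols, GRAIN[worker_kind])


gpu_tasks = tasks_for("gpu", 1024, 1024)
cpu_tasks = tasks_for("cpu", 1024, 1024)
print(len(gpu_tasks), len(cpu_tasks))
```

The same block of work stays whole when it is destined for a GPU and is cut into smaller pieces when it is destined for CPU cores, while the total amount of work is unchanged.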

Linear algebra operations are often the most costly steps in many scientific computing, data analysis and deep learning applications. Therefore, any performance improvement in linear algebra libraries can potentially have a significant impact for many users of high-performance computing resources.

The proposed extensions to the STF model are generic and can also benefit many computational codes beyond the scope of linear algebra.

In the next period, we wish to study the application of this approach to linear algebra algorithms for sparse matrices as well as to multi-linear algebra algorithms (tensor calculations).

Figure: Adjusting the grain size allows smaller tasks to be assigned to CPUs, which will not take up too much of their time, thus avoiding delays for the rest of the machine, while continuing to assign large tasks to GPUs so that they remain efficient.

© PEPR NumPEx

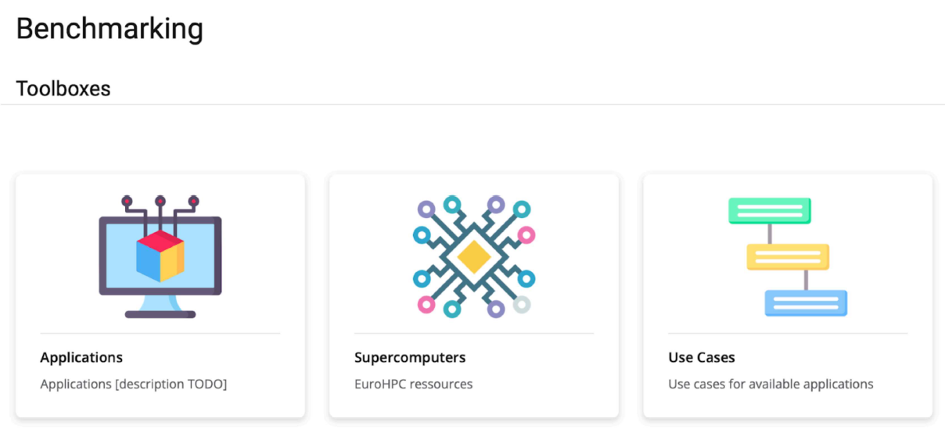

From Git repository to mass run: Exa-MA industrialises the deployment of NumPEx-compliant HPC applications

Find all the information about Exa-MA here.

By unifying workflows and automating key stages of the HPC software lifecycle, the Exa-MA framework contributes to more reliable, portable and efficient application deployment on national and EuroHPC systems.

HPC applications require reproducibility, portability and large-scale testing, but the transition from code to supercomputer remains lengthy and varies from site to site. The objective is to unify the Exa-MA application framework and automate builds, tests and deployments in accordance with NumPEx guidelines.

An Exa-MA application framework has been set up, integrating the management of templates, metadata and verification and validation (V&V) procedures. At the same time, a complete HPC CI/CD chain has been deployed, combining Spack, Apptainer/Singularity and automated submission via ReFrame/SLURM orchestrated by GitHub Actions. This infrastructure operates seamlessly on French national supercomputers and EuroHPC platforms, with end-to-end automation of critical steps.

In the first use cases, the time between code validation and large-scale execution has been reduced from several days to less than 24 hours, without any manual intervention on site. Performance is now monitored by non-regression tests (strong/weak scaling) and will soon be enhanced by profiling artefacts.
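A performance non-regression test of this kind can be sketched as a comparison of strong-scaling efficiency against a reference run. The code below is a standalone illustration, not the Exa-MA or ReFrame implementation: the function names, the 10% tolerance and the timings are assumptions made for the example.

```python
def strong_scaling_efficiency(timings):
    """Efficiency per node count for a fixed problem size.

    timings: dict {node_count: wall_time_seconds}.
    The smallest node count is used as the baseline (efficiency 1.0).
    """
    base_nodes = min(timings)
    base_time = timings[base_nodes]
    return {
        nodes: (base_time * base_nodes) / (time * nodes)
        for nodes, time in sorted(timings.items())
    }


def find_regressions(reference, current, tolerance=0.10):
    """Node counts where efficiency dropped by more than `tolerance`
    (relative) compared with the reference run."""
    ref_eff = strong_scaling_efficiency(reference)
    cur_eff = strong_scaling_efficiency(current)
    return [
        nodes for nodes in ref_eff
        if nodes in cur_eff and cur_eff[nodes] < ref_eff[nodes] * (1 - tolerance)
    ]


# Made-up timings: the new build scales noticeably worse on 4 nodes.
reference_run = {1: 100.0, 2: 52.0, 4: 28.0}
current_run = {1: 100.0, 2: 53.0, 4: 40.0}
print(find_regressions(reference_run, current_run))
```

In a CI/CD chain, a non-empty result would typically fail the pipeline so that the regression is investigated before the new version is deployed.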

The approach deployed is revolutionising the integration of Exa-MA applications, accelerating onboarding and ensuring controlled quality through automated testing and complete traceability.

The next phase of the project involves putting Exa-MA applications online and deploying a performance dashboard.

Figure: Benchmarking website page with views by application, by machine, and by use case.

© PEPR NumPEx

From urban data to watertight multi-layer meshes, ready for city-scale energy simulation

This highlight is based on the work of Christophe Prud'homme, Vincent Chabannes, Javier Cladellas, Pierre Alliez.

This research was carried out by the Exa-MA project, in collaboration with CoE HiDALGO2 and the Ktirio and CGAL projects. Find all the information about Exa-MA here.

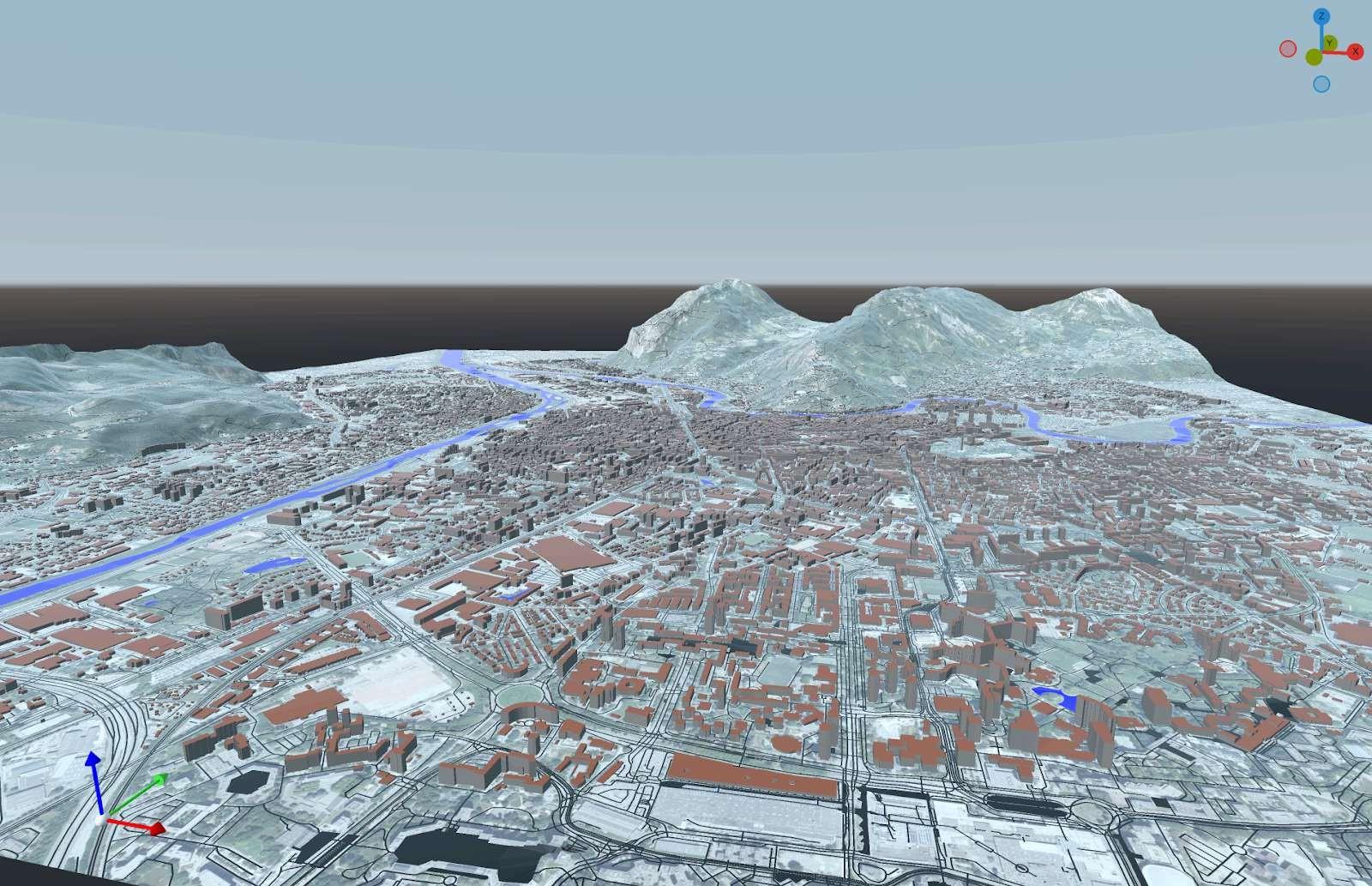

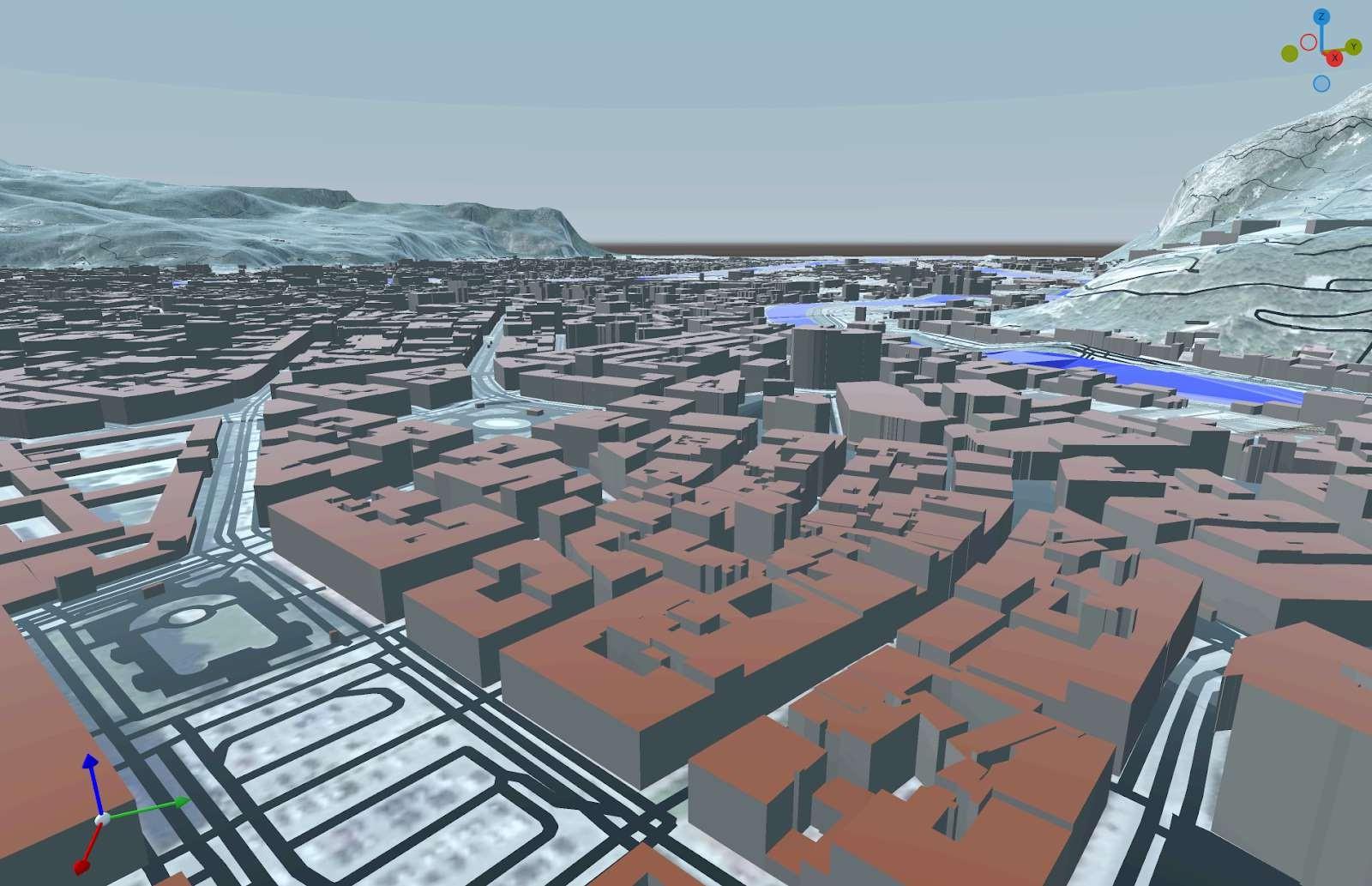

How can we model an entire city to better understand its energy, airflow, and heat dynamics? Urban data are abundant — buildings, roads, terrain, vegetation — but often inconsistent or incomplete. A new GIS–meshing pipeline now makes it possible to automatically generate watertight, simulation-ready city models, enabling realistic energy and microclimate simulations at the urban scale.

Urban energy/wind/heat modeling requires closed and consistent geometries, while the available data (buildings, roads, terrain, hydrography, vegetation) are heterogeneous and often non-watertight. The objective is therefore to reconstruct watertight urban meshes at LoD-0/1, interoperable and enriched with physical attributes and models.

A GIS–meshing pipeline has been developed to automate the generation of closed urban models. It integrates data ingestion via Mapbox, robust geometric operations using Ktirio-Geom (based on CGAL), as well as multi-layer booleans ensuring the topological closure of the scenes. Urban areas covering several square kilometers are thus converted into consistent solid LoD-1/2 models (buildings, roads, terrain, rivers, vegetation). The model preparation time is reduced from several weeks to a few minutes, with a significant gain in numerical stability.
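For a triangle mesh, one common necessary condition for the closure property described above is that every edge is shared by exactly two triangles. The sketch below checks only that condition; it is not the Ktirio-Geom or CGAL API, the function name `is_watertight` is hypothetical, and a production check would also need to verify orientation and the absence of self-intersections.

```python
from collections import Counter


def is_watertight(triangles):
    """Return True if every edge of the mesh belongs to exactly two triangles.

    triangles: iterable of (a, b, c) vertex-index triples.
    """
    edge_count = Counter()
    for a, b, c in triangles:
        for u, v in ((a, b), (b, c), (c, a)):
            # Store edges with sorted endpoints so (u, v) and (v, u) match.
            edge_count[(min(u, v), max(u, v))] += 1
    return bool(edge_count) and all(n == 2 for n in edge_count.values())


# A tetrahedron is closed; removing one face opens a hole.
tetrahedron = [(0, 1, 2), (0, 3, 1), (1, 3, 2), (2, 3, 0)]
print(is_watertight(tetrahedron))
print(is_watertight(tetrahedron[:3]))
```

Boundary edges (counted once) reveal holes, while edges counted three times or more reveal non-manifold junctions, both of which typically break the closed-geometry assumption of energy and CFD solvers.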

The outputs are interoperable with the Urban Building Model (Ktirio-UBM) and compatible with energy and CFD solvers.

This development enables rapid access to realistic urban cases, usable for energy and microclimatic simulations, while promoting the sharing of datasets within the HiDALGO2 Centre of Excellence ecosystem.

The next step is to publish reference datasets (watertight models and associated scripts) on the CKAN platform (n.hidalgo2.eu). This work paves the way for coupling CFD with energy simulation, and for the creation of tools dedicated to the study and reduction of urban heat islands.

Figures: Reconstruction of the city of Grenoble within a 5 km radius, including the road network, rivers and bodies of water. Vegetation has not been included in order to reduce the size of the mesh, which here consists of approximately 6 million triangles — a figure that would at least double if vegetation were included.

© PEPR NumPEx