Exa-DoST: Data-oriented Software and Tools for the Exascale

The advent of future Exascale supercomputers raises multiple data-related challenges.

To enable applications to fully leverage the upcoming infrastructures, a major challenge concerns the scalability of techniques used for data storage, transfer, processing and analytics.

Additional key challenges emerge from the need to adequately exploit emerging technologies for storage and processing, leading to new, more complex storage hierarchies.

Finally, it is becoming necessary to support increasingly complex hybrid workflows that combine simulation, analytics and learning, running at extreme scales across supercomputers interconnected with clouds and edge-based systems.

The Exa-DoST project will address most of these challenges, organized in 3 areas:

1. Scalable storage and I/O;

2. Scalable in situ processing;

3. Scalable smart analytics.

Many of these challenges have already been identified, for example by the Exascale Computing Project in the United States or by many research projects in Europe and France.

As part of the NumPEx program, Exa-DoST targets a much higher technology readiness level than previous national projects concerning the HPC software stack.

It will address the major data challenges by proposing operational solutions co-designed and validated in French and European applications.

This will allow filling the gap left by previous international projects to ensure that French and European needs are taken into account in the roadmaps for building the data-oriented Exascale software stack.

Software

AGIOS

Already contributing Exa-DoST teams: TADaaM

Maturity level: TRL 4

Demonstrated scalability: tens of I/O nodes

WPs expected to contribute: WP1

AGIOS is an I/O scheduling library developed by the TADaaM team, designed to operate at the file level. The library can be easily integrated into any I/O service that handles I/O requests, in order to enhance these services with scheduling capabilities. AGIOS has been successfully evaluated with file systems and implements many scheduling algorithms. Furthermore, the library is designed to facilitate the addition of new algorithms.
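To make the idea of a pluggable scheduling layer concrete, here is a minimal Python sketch. It is not the AGIOS API: `Request`, `fifo`, `offset_order` and `Scheduler` are hypothetical names chosen for illustration, and the two policies are simple stand-ins for real scheduling algorithms.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Request:
    """One I/O request as seen by an I/O service (hypothetical model)."""
    file_id: str
    offset: int
    size: int
    arrival: int  # logical arrival order


# A scheduling algorithm is any function that orders the pending requests,
# so adding a new algorithm means writing one more function of this type.
Policy = Callable[[List[Request]], List[Request]]


def fifo(pending: List[Request]) -> List[Request]:
    """Serve requests in arrival order."""
    return sorted(pending, key=lambda r: r.arrival)


def offset_order(pending: List[Request]) -> List[Request]:
    """Group requests per file and serve them by increasing offset."""
    return sorted(pending, key=lambda r: (r.file_id, r.offset))


class Scheduler:
    """Queues requests for an I/O service and orders them with a policy."""

    def __init__(self, policy: Policy) -> None:
        self.policy = policy
        self.pending: List[Request] = []

    def add(self, request: Request) -> None:
        self.pending.append(request)

    def dispatch(self) -> List[Request]:
        """Return all pending requests in the order chosen by the policy."""
        ordered = self.policy(self.pending)
        self.pending = []
        return ordered
```

In this sketch the I/O service only calls `add` and `dispatch`; swapping the scheduling algorithm is a matter of passing a different policy function to `Scheduler`.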

Actions expected in project

In the context of NumPEx, AGIOS will be utilized in the development of a testbed to evaluate and develop new I/O scheduling approaches. Our primary focus will be on I/O scheduling for parallel file systems and burst buffers.

Moreover, one of the main objectives is to make AGIOS an exascale-compliant library. Therefore, within the scope of NumPEx, considerable effort will be spent on the development of the library, with a focus on exascale systems and workloads.

Damaris

Already contributing Exa-DoST teams: KerData

Maturity level: TRL 7-8

Demonstrated scalability: Yes

WPs expected to contribute: WP1, WP2, WP4

Damaris is an HPC middleware used in MPI-based simulation codes and developed by the KerData team at Inria. Already demonstrated for scalable I/O and in situ visualization, Damaris has been evaluated and validated beyond 14,000 cores on top supercomputers, including Titan, Jaguar and Kraken, for in situ/in transit data processing. Following a bilateral project with TOTAL dedicated to the use of Damaris in seismic codes, ongoing work focuses on using Damaris on pre-Exascale systems as part of two EuroHPC projects: ACROSS and EUPEX. Damaris will be one of the major software libraries enriched and evaluated in Exa-DoST for use on the future French Exascale machine, as part of the work planned in WP1 and WP2.

Actions expected in project

During the timeline of Exa-DoST, we plan to extend Damaris to support programmed and triggered analyses. In a programmed analysis, one or more analyses are each given their own time schedule and are performed asynchronously via Damaris. In a triggered analysis, an iterative (or programmed) analysis detects a process of interest and then launches a further, more detailed analysis. These methods already have a use case in the physics domain, where a CEA code named Coddex will be used to support the development of the integration and to benchmark and verify the capability. The use of GPU resources by Damaris processes will also be developed further during the project.
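The distinction between programmed and triggered analyses can be sketched in a few lines of Python. This is only an illustration of the control flow, not Damaris code: `ProgrammedAnalysis` and `run` are hypothetical names, and everything runs synchronously here, whereas Damaris would perform the analyses asynchronously on dedicated resources.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Field = List[float]


@dataclass
class ProgrammedAnalysis:
    """An analysis with its own schedule (hypothetical model)."""
    name: str
    every: int                       # run every `every` iterations
    detect: Callable[[Field], bool]  # True when something of interest is seen


def run(
    n_iterations: int,
    make_field: Callable[[int], Field],
    programmed: List[ProgrammedAnalysis],
    detailed: Callable[[int, Field], None],
) -> List[Tuple[int, str]]:
    """Run the loop and return a log of (iteration, analysis name) pairs."""
    log: List[Tuple[int, str]] = []
    for it in range(1, n_iterations + 1):
        field = make_field(it)  # stands in for one simulation step
        for analysis in programmed:
            if it % analysis.every != 0:
                continue  # not scheduled at this iteration
            log.append((it, analysis.name))
            if analysis.detect(field):
                # Triggered analysis: launched only when the programmed
                # analysis reports a process of interest.
                detailed(it, field)
                log.append((it, "detailed"))
    return log
```

With a coarse analysis scheduled every two iterations and a detection threshold on the field maximum, the detailed analysis runs only at the iterations where the threshold is exceeded.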

The integration of Damaris asynchronous methods into other in situ frameworks, such as PDI, will be explored. The envisioned approach is a PDI plugin that lets PDI pass data through to Damaris. New MPI capabilities such as MPI Sessions will also be explored, to see whether the Damaris server processes can be accessed through them. This way of launching a job will be assessed for its capacity to better co-locate Damaris resources within a heterogeneous node and to dynamically modify the number of resources available through Damaris.

Other communication frameworks, such as the Mercury library, will be trialed to see whether they give simulations more flexibility in using in situ methods and help transfer data and methods to distributed computational resources.

Deisa

Already contributing Exa-DoST teams: MdlS, DataMove

Maturity level: TRL 4

Demonstrated scalability: execution on the full Adastra machine (338 nodes × 4 AMD MI250X)

WPs expected to contribute: WP2, WP4

Deisa is a Dask-enabled in situ analytics framework. Developed at MdlS in collaboration with the DataMove team, it is a Python tool based on Dask. It makes it possible to process data generated by parallel producers, such as MPI simulations, in Dask. Deisa offers a Python interface to access the descriptor of the generated data ahead of time and build task graphs.

IOPS

Already contributing Exa-DoST teams: TADaaM

Maturity level: TRL 2 – Technology Concept Formulated

Demonstrated scalability: Tests have not been conducted yet

WPs expected to contribute: WP1

The I/O Performance Evaluation Suite (IOPS) is a tool being developed by the TADaaM team to simplify the process of benchmark execution and results analysis in storage systems. It utilizes IOR, a microbenchmark for I/O, to conduct a series of experiments with various parameters. The goal of the tool is to automate the I/O performance evaluation process, as described in Boito et al. [1]. In this study, the authors explored the impact of several parameters on I/O performance, such as the number of nodes, processes, and file sizes, to find a configuration that achieves the system’s peak performance. Subsequently, these parameters were used to investigate the impact of the number of OSTs. IOPS is designed to execute the same analysis in an automatic and more efficient way, reducing the number of executions necessary to characterize the I/O performance of the entire HPC system.

Actions expected in project

IOPS will be developed alongside the NumPEx project. The current first version of the tool performs a static characterization: it executes a brute-force search by evaluating each parameter individually. The idea is to develop a smart tool that decides which parameters to evaluate next based on the results of the previous ones. For this purpose, we plan to use Bayesian optimization to reduce the number of experiments needed. Two further directions will be explored in the context of NumPEx. Firstly, the tool will be extended to characterize the parallel file systems of exascale systems (exascale compliance); secondly, support for other benchmarks, not only for I/O, will be added. The goal is a simple and automatic way of characterizing the performance of exascale platforms.
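As a rough illustration of the static characterization described above, the Python sketch below sweeps one parameter at a time while holding the others at their best value so far. It is not IOPS code: `synthetic_bandwidth` is an invented stand-in for an actual benchmark run (for example one IOR execution), and the parameter names and values are assumptions made for the example.

```python
from math import prod
from typing import Callable, Dict, List, Tuple

Config = Dict[str, int]


def synthetic_bandwidth(cfg: Config) -> float:
    """Purely illustrative performance model; NOT a measurement."""
    return (
        min(cfg["nodes"], 8) * 10.0
        + min(cfg["procs_per_node"], 4) * 5.0
        + min(cfg["file_size_gib"], 16) * 1.0
    )


def one_at_a_time(
    space: Dict[str, List[int]],
    measure: Callable[[Config], float],
) -> Tuple[Config, int]:
    """Evaluate each parameter individually; return (best config, runs)."""
    best: Config = {name: values[0] for name, values in space.items()}
    runs = 0
    for name, values in space.items():
        best_value, best_score = best[name], float("-inf")
        for value in values:
            trial = dict(best, **{name: value})
            score = measure(trial)  # one benchmark execution
            runs += 1
            if score > best_score:  # keep the first value reaching the peak
                best_value, best_score = value, score
        best[name] = best_value
    return best, runs


def full_grid_size(space: Dict[str, List[int]]) -> int:
    """Number of runs an exhaustive grid search would need."""
    return prod(len(values) for values in space.values())
```

Even this simple sweep needs one benchmark run per candidate value, which is the cost a model-guided search such as Bayesian optimization aims to reduce further by choosing the next configuration from previous results.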

Joblib

Already contributing Exa-DoST teams: MIND, SODA

Maturity level: TRL 7-8

Demonstrated scalability: 100s of nodes

WPs expected to contribute: WP3

Joblib is a library developed by the MIND team that provides simple tools for running embarrassingly parallel computations, moving seamlessly between single-node and multi-node runs. It is a set of tools for lightweight pipelining in Python. In particular:

- Transparent disk-caching of functions and lazy re-evaluation (memoize pattern).

- Simple interface for embarrassingly parallel computing.

Joblib is optimized to be fast and robust on large data in particular and has specific optimizations for NumPy arrays. It is BSD-licensed.
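The two features listed above can be illustrated with joblib's `Memory` and `Parallel` classes. This is a minimal sketch: the `square` function and the helper names are placeholders, and the thread-based hint is chosen only to keep the example light (without `prefer="threads"`, joblib uses its default process-based backend).

```python
import tempfile

from joblib import Memory, Parallel, delayed


def square(x):
    """Stand-in for an expensive computation."""
    return x * x


def cached_square_in(cache_dir):
    """Return a disk-cached version of `square` stored under `cache_dir`."""
    memory = Memory(cache_dir, verbose=0)
    return memory.cache(square)


def parallel_squares(values, n_jobs=2):
    """Apply `square` to every value as independent parallel tasks."""
    return Parallel(n_jobs=n_jobs, prefer="threads")(
        delayed(square)(v) for v in values
    )


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as cache_dir:
        cached = cached_square_in(cache_dir)
        cached(4)  # computed, then written to the on-disk cache
        cached(4)  # same argument: result is loaded from the cache
    print(parallel_squares(range(5)))
```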

Actions expected in project

In the context of Exa-DoST, our goal is to make joblib an exascale-ready tool for handling simple parallel pipelines for a variety of applications. Ongoing actions related to NumPEx follow four main axes:

- Extend the available backends in joblib to allow more advanced workload distribution. Importantly, links with the StarPU team will allow us to develop fine-grained workflow management.

- Improve the support of resource management and constraints in joblib with task annotation.

- Provide novel backends for caching, in particular in the context of large distributed computing. These backends will be based either on centralized database systems or on distributed file systems developed in WP3.

- Provide an API for seamless checkpointing in Python. Checkpointing is critical when developing distributed applications, either for fault tolerance or for saving different system states for result inspection (performance or analysis).

Melissa

Already contributing Exa-DoST teams: DataMove

Maturity level: Used in different projects (national, EU)

Demonstrated scalability: 30K cores – Ran on JUWELS, Jean Zay, MareNostrum and Fugaku

WPs expected to contribute: WP2, WP3

Melissa is software developed by DataMove for the online processing of data produced by large-scale ensemble runs (sensitivity analysis, data assimilation, deep surrogate training).
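One way to picture online processing of an ensemble is to update statistics as each member's result arrives, so that no member's output needs to be stored. The Python sketch below uses Welford's running mean and variance for this; it is a generic illustration, not Melissa's API, and `RunningStats` is a hypothetical name.

```python
from typing import List, Sequence


class RunningStats:
    """Per-cell mean and variance updated one ensemble member at a time."""

    def __init__(self, n_cells: int) -> None:
        self.count = 0
        self.mean: List[float] = [0.0] * n_cells
        self._m2: List[float] = [0.0] * n_cells  # sum of squared deviations

    def update(self, field: Sequence[float]) -> None:
        """Fold one member's field into the statistics (Welford update)."""
        self.count += 1
        for i, x in enumerate(field):
            delta = x - self.mean[i]
            self.mean[i] += delta / self.count
            self._m2[i] += delta * (x - self.mean[i])

    def variance(self) -> List[float]:
        """Unbiased sample variance; needs at least two members."""
        if self.count < 2:
            raise ValueError("variance needs at least two ensemble members")
        return [m2 / (self.count - 1) for m2 in self._m2]
```

Each call to `update` costs memory proportional to one field, regardless of how many ensemble members have been processed.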

Actions expected in project

Ongoing work related to NumPEx follows two main axes:

- Data transfers: study the benefits of using ADIOS2 to transfer data from clients to servers (NxM data transfers) instead of the current implementation based on ZMQ. Benefits include:

  - Performance gains, as ADIOS2 is expected to leverage high-performance networks better than ZMQ.

  - Extended capabilities for NxM data transfers relying on ADIOS2 features.

- Simulation-based inference: joint work with the Statify team to investigate whether Melissa could be extended to support large-scale simulation-based inference (ensemble runs + online learning using reversible diffusion neural networks).

Ultimately, in the context of Exa-DoST, our goal is to make Melissa an exascale-ready tool for handling large-scale ensemble runs for a variety of applications.

NFS-Ganesha

Already contributing Exa-DoST teams: DSSI

Maturity level: TRL 7

Demonstrated scalability: was used in production on CEA’s supercomputer

WPs expected to contribute: WP1, WP2, WP4

NFS-Ganesha is an NFSv3/NFSv4.x/9p.2000L server that runs in user space and was originally developed at CEA. Industrial partners including IBM, Red Hat and Panasas have since joined the project, which is now very active in its development and evolution. NFS-Ganesha is part of Fedora 21. NFS-Ganesha has a highly layered architecture. The top layers manage the supported remote file system protocols. The lowest layers, the File System Abstraction Layers (FSALs), provide dedicated support for various file systems. Each FSAL is delivered as a shared object, dynamically loaded when the server starts. A single server can load and use several FSALs at the same time. NFS-Ganesha also has layers dedicated to aggressive metadata caching and state management (making states acquired via different protocols interoperable: an NLMv4 lock can block an NFSv4 or a 9p.2000L lock if the same object is accessed and locked). Within SAGE it has been used as a backend of NFS-Ganesha, to implement a high performance POSIX namespace over IO-SEA objects. In this scope, it is used as an ephemeral server in the IO-SEA software stack.

Actions expected in project

During the timeline of Exa-DoST, we plan to use NFS-Ganesha as a “Swiss Army knife” for building file systems. The software is quite versatile, and its architecture makes it easy to plug any storage system behind NFS-Ganesha. In particular, NFS-Ganesha now has full support for addressing object stores through a POSIX interface (via the NFS protocol).

PDI

Already contributing Exa-DoST teams: MdlS, DataMove

Maturity level: TRL 7

Demonstrated scalability: 300+ nodes on Adastra

WPs expected to contribute: WP1, WP2, WP4

The PDI data interface is a tool developed at MdlS that supports loose coupling of simulation codes with libraries. The simulation code is annotated in a library-agnostic way, and HPC data libraries can then be used from the specification tree without touching the simulation code. This approach works well for many concerns, including parameter reading, data initialization, post-processing, result storage to disk, visualization, fault tolerance, logging, and inclusion as part of code coupling or of an ensemble run; many PDI plugins have been developed for these purposes. PDI is deployed in production in multiple large-scale European HPC simulation codes.
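The loose coupling described above can be pictured with a toy Python model. It does not use the PDI API: `DataInterface`, `expose`, the layout of `spec` and the `simulate` function are all hypothetical, chosen only to show how a simulation can name its data once while a separate specification decides which plugins handle it.

```python
from typing import Any, Callable, Dict, List

Handler = Callable[[str, Any], None]


class DataInterface:
    """Routes exposed data to plugins according to a specification tree."""

    def __init__(self, spec: Dict[str, Any], plugins: Dict[str, Handler]) -> None:
        # Assumed spec layout: {"plugins": {plugin_name: [data_name, ...]}}
        self._handlers: Dict[str, List[Handler]] = {}
        for plugin_name, data_names in spec["plugins"].items():
            handler = plugins[plugin_name]
            for data_name in data_names:
                self._handlers.setdefault(data_name, []).append(handler)

    def expose(self, name: str, value: Any) -> None:
        """Called by the simulation; it knows nothing about the plugins."""
        for handler in self._handlers.get(name, []):
            handler(name, value)


def simulate(interface: DataInterface, steps: int) -> None:
    """Library-agnostic simulation loop: it only annotates its data."""
    temperature = 0.0
    for step in range(steps):
        temperature += 1.5
        interface.expose("step", step)
        interface.expose("temperature", temperature)
```

Changing what happens to `temperature` (storing it, logging it, sending it elsewhere) only requires editing `spec` and the plugin table, never `simulate` itself.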

Actions expected in the project

During the timeline of Exa-DoST, we plan to:

- Expose device data directly to PDI. PDI will manage data transfer between the host and device with this functionality. This way, we can have a more flexible way of getting device data for in-situ analytics.

- Develop new plugins in PDI: one idea is to support Damaris as a PDI plugin to have dedicated processes for the data movement rather than using the same processes as the simulation.

- Optimize current plugins for more functionalities. For example, we will further develop the FTI plugin for checkpointing.

Phobos

Already contributing Exa-DoST teams: DSSI

Maturity level: TRL 5-6

Demonstrated scalability: up to petabytes (this is storage system related software)

WPs expected to contribute: WP1, WP2, WP4

Phobos is an open-source object store developed at CEA (DSSI) with a focus on long-term storage and tape management. It is designed to be fully distributed and scalable, making it possible to spread services among numerous dedicated I/O machines. It is based on open protocols (such as S3/Swift) and open storage formats (such as LTFS) to avoid vendor lock-in and ensure long-term access to the stored information. Its design allows the use of various databases, from relational DB engines (PostgreSQL) to NoSQL engines (MongoDB) and key-value stores (Redis). The building blocks of the Phobos architecture are backend modules (plugins), available as shared libraries, which let Phobos adapt to multiple hardware solutions.

Actions expected in project

During the timeline of Exa-DoST, we plan to provide an open-source solution for object-store-based storage. Object stores are an important path to follow because they offer the scalability and capacity required to manage the “huge storage” of exascale systems (parallel file systems such as Lustre may have difficulty scaling up to Exascale requirements).

RobinHood

Already contributing Exa-DoST teams: DSSI

Maturity level: TRL 7

Demonstrated scalability: in production at the CEA’s EXA-1 supercomputer

WPs expected to contribute: WP1, WP2, WP4

RobinHood Policy Engine is a versatile tool developed at CEA (SISR) to manage the contents of large file systems. It maintains a replica of filesystem metadata in a database that can be queried at will. It makes it possible to schedule mass actions on filesystem entries by defining attribute-based policies, provides fast, enhanced clones of ‘find’ and ‘du’, and gives administrators an overall view of filesystem contents through its web UI and command line tools. Originally developed for HPC, it has been designed to perform all its tasks in parallel, so it is particularly well suited to large filesystems with millions of entries and petabytes of data. It is of course also possible to use all its features to manage smaller filesystems, like ‘/tmp’ on workstations. IO-SEA will leverage RobinHood by using it as a building block for developing the Vertical Data Placement Engine, taking advantage of the experience gained by developing RobinHood as well as of existing mechanisms and algorithms.
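The principle of querying a metadata replica instead of walking the filesystem can be sketched with Python's built-in `sqlite3` module. This is not RobinHood's schema or policy language: the `entries` table, its columns and the function names are assumptions made for the example.

```python
import sqlite3
from typing import Iterable, List, Tuple


def build_replica(entries: Iterable[Tuple[str, int, int]]) -> sqlite3.Connection:
    """Load (path, size in bytes, age in days) tuples into an in-memory DB."""
    db = sqlite3.connect(":memory:")
    db.execute(
        "CREATE TABLE entries (path TEXT PRIMARY KEY, size INTEGER, age_days INTEGER)"
    )
    db.executemany("INSERT INTO entries VALUES (?, ?, ?)", entries)
    return db


def select_for_policy(db: sqlite3.Connection, min_size: int, min_age_days: int) -> List[str]:
    """Attribute-based policy: entries that are both large and old enough."""
    rows = db.execute(
        "SELECT path FROM entries WHERE size >= ? AND age_days >= ? ORDER BY path",
        (min_size, min_age_days),
    )
    return [row[0] for row in rows]


def disk_usage(db: sqlite3.Connection, prefix: str) -> int:
    """'du'-like total size under a path prefix, answered from the replica."""
    (total,) = db.execute(
        "SELECT COALESCE(SUM(size), 0) FROM entries WHERE substr(path, 1, ?) = ?",
        (len(prefix), prefix),
    ).fetchone()
    return total
```

Both queries touch only the database, which is what makes ‘find’-like and ‘du’-like operations fast on very large namespaces; a real policy run would then apply an action (archive, purge, migrate) to the selected paths.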

Actions expected in project

During the timeline of Exa-DoST, we plan to provide a versatile utility for triggering automated actions on files and directories within a filesystem when they meet identified characteristics. The most recent developments in RobinHood make it possible to operate on objects stored in object stores as well as on filesystems.

scikit-learn

Already contributing Exa-DoST teams: MIND, SODA

Maturity level: TRL 8

Demonstrated scalability: 100s of nodes.

WPs expected to contribute: WP3

scikit-learn is a reference library for machine learning in Python, developed at Inria. It provides a simple and robust API to the whole traditional ML stack, and in particular:

- Simple and efficient tools for predictive data analysis

- Accessible to everybody, and reusable in various contexts

- Built on NumPy, SciPy, and matplotlib

- Open source, commercially usable – BSD license

Actions expected in project

During the timeline of Exa-DoST, we plan to improve scikit-learn support for very large datasets and distributed runs, as well as its interfacing with exascale workflows. In particular, our actions will aim at:

- Improving the support of the array API with distributed data formats such as dask arrays, which support lazy operations that can be efficiently distributed.

- Improving the support of the partial_fit API, which would allow us to integrate scikit-learn in distributed workflows to analyze the output of large simulations or ensembles of simulations.
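As a reminder of what the existing `partial_fit` API looks like, the sketch below trains a linear classifier incrementally on chunks of data, the way a workflow might consume simulation output piece by piece. The synthetic data generator `simulation_chunks` is an invented stand-in for real simulation output.

```python
import numpy as np
from sklearn.linear_model import SGDClassifier


def simulation_chunks(n_chunks, chunk_size, seed=0):
    """Yield (X, y) chunks of synthetic data, one at a time."""
    rng = np.random.default_rng(seed)
    for _ in range(n_chunks):
        X = rng.normal(size=(chunk_size, 2))
        y = (X[:, 0] + X[:, 1] > 0).astype(int)  # linearly separable labels
        yield X, y


def fit_incrementally(chunks, classes):
    """Train without ever holding the full dataset in memory."""
    model = SGDClassifier(random_state=0)
    for X, y in chunks:
        # `classes` must list every label up front, because a single
        # chunk is not guaranteed to contain all of them.
        model.partial_fit(X, y, classes=classes)
    return model


if __name__ == "__main__":
    model = fit_incrementally(simulation_chunks(20, 200), np.array([0, 1]))
    X_test, y_test = next(simulation_chunks(1, 500, seed=1))
    print("held-out accuracy:", model.score(X_test, y_test))
```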

Fives

Already contributing Exa-DoST teams: KerData

Maturity level: TRL 3

Demonstrated scalability: Able to simulate the behavior of a 10PB Lustre file-system, and to replay ~5k jobs in ~20 minutes.

WPs expected to contribute: WP1, WP4

Fives is a simulator of high-performance storage systems based on WRENCH and SimGrid, two state-of-the-art simulation frameworks. In particular, Fives can handle the model of a parallel file system such as Lustre together with a computing partition, and simulate a set of jobs performing I/O on the resulting HPC system with high accuracy. Within Fives, a job model is designed to represent a history of jobs and submit them to a job scheduler (a conservative backfilling strategy is available in WRENCH). A model of an existing supercomputer, Theta at Argonne National Laboratory, and a reimplemented version of its Lustre file system have been developed in this simulator. On a class of jobs, Fives is able to reproduce the I/O behavior of that system with a good level of accuracy compared to real-world data.

Actions expected in project

During the timeline of Exa-DoST, we plan to extend the capabilities of Fives in several ways. Firstly, at the job model level, Fives is currently limited to very basic information on the I/O behavior of applications (total volume read or written, total I/O time). One evolution within Exa-DoST will be to augment this model so that it can describe I/O patterns more precisely. Fives will then be enhanced to take this new information into account, improving the accuracy of the simulator. On the infrastructure model side, we also plan to implement emerging storage systems such as DAOS, with the aim of having sufficient abstractions to simulate a wide variety of high-performance storage systems.

Consortium

The consortium gathers 11 core research teams, 6 associate research teams and one industrial partner. They cover the two sides of the domain of expertise required in the project. On one hand, computer science research teams bring expertise on data-related research on supercomputing infrastructures. On the other hand, computational science research teams bring expertise on the challenges faced and handled by the most advanced applications. These teams represent 12 of the major French establishments involved in the field of data handling at Exascale. Below is a brief description of the core teams.

| Core teams | Institutions |
|---|---|
| DataMove | CNRS, Grenoble-INP, Inria, Université Grenoble Alpes |
| DPTA | CEA |
| IRFM | CEA |
| JLLL | CNRS, Observatoire de la Côte d’Azur, Université Côte d’Azur |
| KerData | ENS Rennes, Inria, INSA Rennes |
| LESIA | CNRS, Observatoire de Paris, Sorbonne Université, Université Paris Cité |
| LAB | CNRS, Université de Bordeaux |
| MdlS | CEA, CNRS, Université Paris-Saclay, Université de Versailles Saint-Quentin-en-Yvelines |
| MIND | CEA, Inria |
| SANL | CEA |
| SISR | CEA |
| TADaaM | Bordeaux INP, CNRS, Inria, Université de Bordeaux |

| Associated teams | Institutions |
|---|---|
| CMAP | CNRS, École Polytechnique |
| IRFU | CEA |
| M2P2 laboratory | CNRS, Université Aix-Marseille |
| Soda | Inria |
| Statify | CNRS, Grenoble-INP, Inria, Université Grenoble Alpes |
| Thoth | CNRS, Grenoble-INP, Inria, Université Grenoble Alpes |

| Industrial partner |
|---|
| DataDirect Networks (DDN) |

Our Teams

DataMove is a joint research team between CNRS, Grenoble-INP, Inria and Université Grenoble Alpes and is part of the Computer Science Laboratory of Grenoble (LIG, CNRS/Université Grenoble Alpes). Created in 2016, DataMove is today composed of 9 permanent members and about 20 PhDs, postdocs and engineers. The team’s research activity is focused on High Performance Computing. Moving data on large supercomputers is becoming a major performance bottleneck, and the situation is just worsening from one supercomputer generation to the next. The DataMove team focuses on data-aware large scale computing, investigating approaches to reduce data movements on large scale HPC machines with two main research directions: data aware scheduling algorithms for job management systems, and large scale in situ data processing.

DPTA CEA/DAM is recognized in the HPC community for the realization of massively parallel multi-physics simulation codes. The team from the Department of Theoretical and Applied Physics (DPTA) of the DIF, led by Laurent Colombet, developed in collaboration with Bruno Raffin (director of research at Inria and DataMove member) a high-performance in situ component to a simulation code called ExaStamp, which will serve as a starting point for contributions to the Exa-DoST project dedicated to in situ processing.

The Institute for Magnetic Fusion Research is the main magnetic fusion research laboratory in France. For more than 50 years, it has carried out research on thermonuclear magnetic fusion both experimental and theoretical. The IRFM team brings its HPC expertise to the project through the 5D gyrokinetic code GYSELA that it has been developing for 20 years through national and international collaborations with a strong interaction between physicists, mathematicians and computer scientists. With an annual consumption of 150 million hours, the team is already making intensive use of available national and European petascale resources. Because of the multi-scale physics at play and because of the duration of discharges, it is already known that ITER core-edge simulations will require exascale HPC capabilities. GYSELA will serve to build relevant illustrators for Exa-DoST, to demonstrate the benefits of Exa-DoST’s contributions (i.e., how the libraries enhanced, optimized and integrated within Exa-DoST will benefit GYSELA-based application scenarios).

JL Lagrange Laboratory leads the French contribution to the SKA1 global observatory project, and contributes to the procurement of its two supercomputers (SDP) and to the establishment of SKA Regional Data Centers (SRCs). JLLL is part of the Observatoire de la Côte d’Azur (OCA, CNRS/Université Côte d’Azur), an internationally recognized center for research in Earth Sciences and Astronomy. With some 450 staff, OCA is one of 25 French astronomical observatories responsible for the continuous and systematic collection of observational data on the Earth and the Universe. Its role is to explore, understand and transfer knowledge about Earth sciences and astronomy, whether in astrophysics, geosciences, or related sciences such as mechanics, signal processing, or optics. One of the core contributions of OCA to SKA is made through the SCOOP Agile SAFe team, working on hardware/software co-design for the two SKA supercomputers.

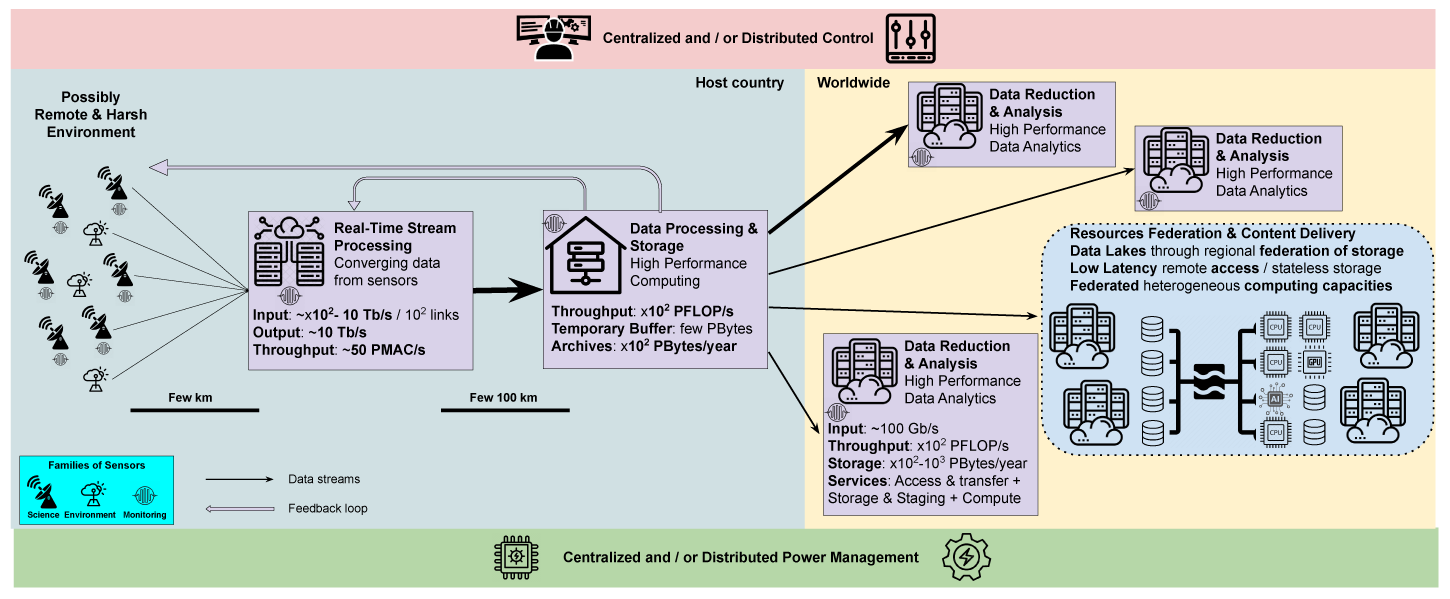

KerData is a joint team of the Inria Center at Rennes University and of the French laboratory for research and innovation in digital science and technology (IRISA, CNRS/Université de Rennes 1). Its research focuses on designing innovative architectures and systems for data I/O, storage and processing on extreme-scale systems: (pre-)Exascale high-performance supercomputers, cloud-based and edge-based infrastructures. In particular, it addresses the data-related requirements of new, complex applications which combine simulation, analytics and learning and require hybrid execution infrastructures (supercomputers, clouds, edge).

The Laboratoire d’Études Spatiales en Astrophysique is strongly engaged in low-frequency radio-astronomy, with important responsibilities in the SKA and its precursor NenuFAR, together with a long history of using major radio-astronomy facilities such as LOFAR and MeerKAT. LESIA is part of Observatoire de Paris (OBS.PARIS, CNRS/Université de Paris-PSL), a national research center in astronomy and astrophysics that employs approximately 1000 people (750 in permanent positions) and is the largest astronomy center in France. Beyond their unique expertise in radio-astronomy, the teams from OBS.PARIS bring strong competences in the design and construction of giant instruments, including associated HPC / HPDA capabilities, dedicated to astronomical data processing and reduction.

The Laboratoire d’astrophysique de Bordeaux has been carrying out research and development activities for SKA since 2015 to produce detectors for band 5 of the SKA-MID array. On-site prototype demonstration is scheduled in South Africa around mid-2024. This is currently the central and unique French contribution to the antenna/receptor hardware/firmware. In addition, LAB wishes to strengthen its contribution to SKA through relevant activities for SKA-SDP. Generally speaking, scientists and engineers at LAB have been working together on radio-astronomy science, software, and major instruments for several decades (ALMA, NOEMA, SKA…), producing cutting-edge scientific discoveries in astrochemistry, stellar and planetary formation, and the study of the Solar system giant planets.

The Maison de la Simulation is a joint laboratory between CEA, CNRS, Université Paris-Saclay and Université Versailles Saint-Quentin. It specializes in computer science for high-performance computing and numerical simulations in close connection with physical applications. The main research themes of MdlS are parallel software engineering, programming models, scientific visualization, artificial intelligence, and quantum computing.

MIND is an Inria team doing research at the intersection of statistics, machine learning and signal processing, with the ambition to impact neuroscience and neuroimaging research. Additionally, MIND is supported by CEA and affiliated with NeuroSpin, the largest neuroimaging facility in France dedicated to ultra-high magnetic fields. The MIND team is a spin-off from the Parietal team, located at Inria Saclay and at CEA Saclay.

SANL is a team from the computer science department (DSSI) of CEA, led by Marc Pérache, CEA director of research, developing tools to enable simulation codes to manage inter-code and post-processing outputs on CEA supercomputers. This team is involved in I/O for scientific computing codes through the Hercule project. Hercule is an I/O layer optimized to deal with large amounts of data at scale on large runs. Hercule manages data semantics to deal with weak code coupling through the filesystem (inter-code). The team has also studied in situ analysis through the PaDaWAn research project.

The SISR team at CEA is in charge of every data management activity inside the massive HPC centers hosted and managed by CEA/DIF. Those activities are quite varied: designing and acquiring new mass storage systems, installing them, and maintaining them in operational conditions. Beyond pure system administration tasks, the SISR team develops a large framework of open-source software dedicated to data management and mass storage. Strongly involved in R&D efforts, the SISR is a major actor in the technical and scientific collaboration between CEA and ATOS, and is part of many EuroHPC-funded projects (in particular, the SISR drives the IO-SEA project).

TADaaM is a joint research team between the University of Bordeaux, Inria, CNRS, and Bordeaux INP, part of the Laboratoire Bordelais de Recherche en Informatique (LaBRI – CNRS/Bordeaux INP/Université de Bordeaux). Its goal is to manage data at system scale by working on the way data is accessed through the storage system, transferred over the high-speed network or stored in (heterogeneous) memory. To achieve this, TADaaM plans to design and build a stateful system-wide service layer for data management in HPC systems, which should reconcile applications’ needs and system characteristics, and combine information about both to optimize and coordinate the execution of all the running applications at system scale.
