Find all the information about Exa-SofT here.

Linear algebra libraries lie at the core of scientific computing and artificial intelligence. By rethinking their execution on hybrid CPU/GPU architectures, new task-based models enable significant gains in performance, portability, and resource utilization.

Libraries for solving or manipulating linear systems are used in many fields of numerical simulation (aeronautics, energy, materials) and in artificial intelligence (model training). We seek to make these libraries as fast as possible on supercomputers that combine traditional processors (CPUs) with graphics accelerators (GPUs). To do this, we use asynchronous task-based execution models that maximise the utilisation of computing units.

This is an active area of research, but most existing approaches struggle to divide the work at the right granularity for heterogeneous computing units. Over the last few months, we have developed several extensions to a task-based parallel programming model called STF (Sequential Task Flow), which allows complex algorithms to be implemented in a more elegant, concise and portable way. By combining this model with dynamic and recursive work-partitioning techniques, we significantly increase performance on supercomputers equipped with accelerators such as GPUs, in particular because the granularity of the computations can be adapted dynamically to the occupancy of the computing units. For example, with this approach we have achieved a 2x speedup over other state-of-the-art libraries (MAGMA, PaRSEC) on a hybrid CPU/GPU machine.
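To make the STF idea concrete, here is a minimal sketch in Python, not the project's actual runtime or API. Tasks are submitted in ordinary sequential program order, each declaring the data it reads or modifies, and the dependencies between tasks are inferred from those declarations. The names `TaskFlow`, `submit`, `waves` and `cholesky_tasks` are hypothetical and introduced only for illustration, and the tiled Cholesky factorisation is simply a familiar dense linear algebra example.

```python
from collections import defaultdict

READ, RW = "R", "RW"  # hypothetical access modes: read-only and read-write


class TaskFlow:
    """Toy STF recorder: dependencies are inferred from declared data accesses."""

    def __init__(self):
        self.tasks = []                   # task names, in submission order
        self.deps = {}                    # task index -> indices it must wait for
        self.last_writer = {}             # data handle -> last task that modified it
        self.readers = defaultdict(list)  # data handle -> readers since last write

    def submit(self, name, *accesses):
        idx = len(self.tasks)
        self.tasks.append(name)
        waits = set()
        for handle, mode in accesses:
            if handle in self.last_writer:
                waits.add(self.last_writer[handle])  # wait for the latest version
            if mode == RW:
                waits.update(self.readers[handle])   # wait for pending readers
                self.last_writer[handle] = idx
                self.readers[handle] = []
            else:
                self.readers[handle].append(idx)
        waits.discard(idx)
        self.deps[idx] = waits
        return idx

    def waves(self):
        """Group tasks into levels; tasks in one level are mutually independent."""
        level = {}
        for idx in range(len(self.tasks)):
            level[idx] = 1 + max((level[d] for d in self.deps[idx]), default=-1)
        grouped = defaultdict(list)
        for idx, lv in level.items():
            grouped[lv].append(self.tasks[idx])
        return [grouped[lv] for lv in sorted(grouped)]


def cholesky_tasks(flow, nt):
    """Submit a tiled Cholesky factorisation on an nt x nt grid of tiles."""
    for k in range(nt):
        flow.submit(f"POTRF({k},{k})", ((k, k), RW))
        for i in range(k + 1, nt):
            flow.submit(f"TRSM({i},{k})", ((k, k), READ), ((i, k), RW))
        for i in range(k + 1, nt):
            flow.submit(f"SYRK({i},{i})", ((i, k), READ), ((i, i), RW))
            for j in range(k + 1, i):
                flow.submit(f"GEMM({i},{j})",
                            ((i, k), READ), ((j, k), READ), ((i, j), RW))


flow = TaskFlow()
cholesky_tasks(flow, 3)
for wave in flow.waves():
    print(wave)
```

The loop nest reads like the sequential algorithm, yet the recorded graph shows that the two `TRSM` tasks of the first step are independent of each other and could run concurrently. A real runtime would start tasks asynchronously as soon as their dependencies are satisfied rather than in discrete waves.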

Linear algebra operations are often the most costly steps in many scientific computing, data analysis and deep learning applications. Therefore, any performance improvement in linear algebra libraries can potentially have a significant impact for many users of high-performance computing resources.

The proposed extensions to the STF model are generic and can also benefit many computational codes beyond the scope of linear algebra.

In the coming period, we plan to study how this approach applies to linear algebra algorithms for sparse matrices and to multi-linear algebra algorithms (tensor computations).

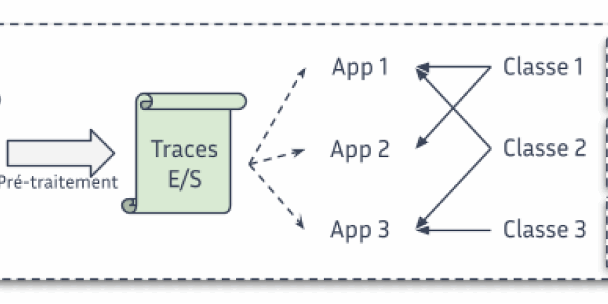


Figure: Adapting granularity allows smaller tasks to be assigned to CPUs so that they are not occupied for too long, which avoids delaying the rest of the machine, while large tasks continue to be assigned to GPUs so that they remain efficient.

© PEPR NumPEx
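The granularity adaptation shown in the figure can be sketched as a recursive split. This is a simplified illustration, not the scheduling policy used in the project. The functions `refine` and `dispatch`, the `cpu_grain` threshold of 256 and the single "is a GPU idle?" test are all assumptions made for this example, and tile sizes are assumed to be powers of two.

```python
def refine(tile, kind, cpu_grain=256):
    """Return the sub-tiles a worker of the given kind should process.

    A tile is (row, col, size). GPUs keep the tile whole; for CPUs the tile
    is split recursively into quadrants until each piece is small enough.
    """
    row, col, size = tile
    if kind == "gpu" or size <= cpu_grain:
        return [tile]
    half = size // 2
    pieces = []
    for d_row in (0, half):
        for d_col in (0, half):
            pieces.extend(refine((row + d_row, col + d_col, half), kind, cpu_grain))
    return pieces


def dispatch(tile, gpu_idle, cpu_grain=256):
    """Pick a worker kind from current occupancy, then adapt the granularity."""
    kind = "gpu" if gpu_idle else "cpu"
    return kind, refine(tile, kind, cpu_grain)


big_tile = (0, 0, 1024)
print(dispatch(big_tile, gpu_idle=True))           # one large task for the GPU
kind, pieces = dispatch(big_tile, gpu_idle=False)
print(kind, len(pieces), "sub-tasks of size", pieces[0][2])
```

Because the split happens at dispatch time, the same task can stay coarse when a GPU is available and become fine-grained when only CPUs are free, which is the behaviour the figure describes.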
